50Hz vs 60Hz: Understanding the Key Differences in Power Frequencies

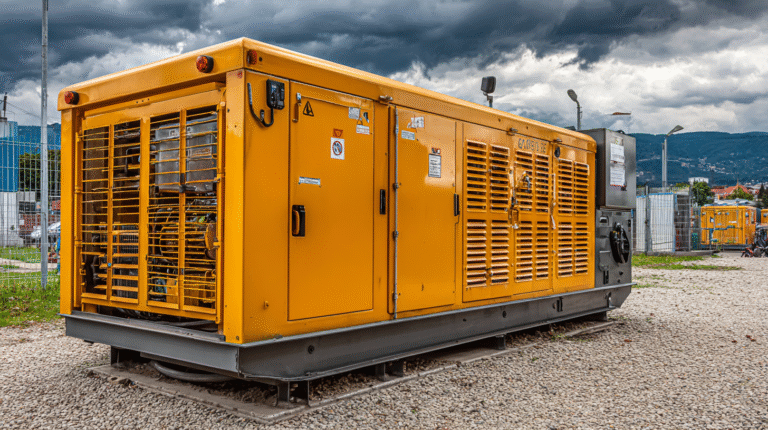

So, you’re curious about why the power coming out of your wall socket cycles either 50 or 60 times a second? It’s not just some random number; it’s a whole story involving history, engineering choices, and even a bit of a rivalry. Think of it like different countries using miles versus kilometers: it just depends on where you are and how things developed. In this guide, we’ll explore the 50Hz vs 60Hz debate, breaking down what makes these frequencies different, how they affect things like motors and lights, and why we ended up with two main standards instead of just one. Even something like a diesel generator is designed with these frequencies in mind.

Key Takeaways

- The main difference between 50Hz and 60Hz power is the speed at which the alternating current cycles, affecting motor speeds and some equipment performance.

- Historically, 60Hz became the standard in North America largely due to Westinghouse’s choices, while 50Hz became dominant in Europe and other regions, influenced by companies like AEG.

- While 60Hz systems allow slightly smaller transformers and faster motor speeds, 50Hz systems see slightly lower reactive and skin-effect losses on long lines, are perfectly adequate, and are often paired with higher voltages.

- Many appliances can work on either frequency, but some might not perform optimally or could even be damaged if used on the wrong frequency without a converter.

- The choice of frequency impacts the size of transformers and the potential for noticeable light flicker, with 50Hz historically being a compromise to reduce flicker compared to earlier lower frequencies.

1. Historical Adoption Of 50Hz vs 60Hz

Back in the day, when electricity was just starting to get going, there wasn’t really a set standard for frequency. Lots of different frequencies were floating around, and it was kind of a free-for-all. Think about it, different towns, even different companies, were using all sorts of frequencies, like 25 Hz, 40 Hz, and even higher ones like 133 Hz. It made things pretty complicated when you wanted equipment to work across different places.

Things started to shake out around the turn of the 20th century. In Europe, companies like AEG began leaning towards 50 Hz. They found it was a decent balance for things like lighting and motors, especially with the technology they had back then. This choice really took hold, and soon, much of Europe, Asia, Africa, and Australia were on the 50 Hz track. It was partly because they looked to European developments as a model when building their own grids.

Meanwhile, over in the United States, Westinghouse Electric Corporation was pushing for 60 Hz. This decision, influenced by factors like Nikola Tesla’s work and the needs of early electrical systems, set America on a different path. The two systems, 50 Hz and 60 Hz, weren’t compatible, so countries tended to stick with one or the other based on who they were getting their technology from.

It’s interesting how these early choices, sometimes made with limited information, ended up shaping global power systems for decades. It wasn’t until after World War II that things really started to standardize more widely, partly driven by the boom in consumer electronics and the need for international trade in electrical gear. You can see how important standardization was for things like diesel generator size and equipment compatibility.

The early days of electrification were marked by a wide variety of frequencies, a situation that gradually consolidated into the 50 Hz and 60 Hz standards we see today. This evolution was driven by technological capabilities, economic considerations, and the influence of major electrical manufacturers.

Here’s a quick look at how things started to settle:

- Late 1800s: Many different frequencies in use globally.

- Around 1900-1910: European industry largely adopts 50 Hz.

- 1890s onward: Westinghouse champions 60 Hz in the US.

- Post-WWII: Increased standardization and international trade solidify the two main frequencies.

2. Key Differences Between 50Hz and 60Hz

So, what’s the big deal between 50Hz and 60Hz power? It really boils down to how fast the electricity is cycling, or oscillating, per second. Think of it like the tempo of a song; one is a bit faster than the other. This difference, while seemingly small, has ripple effects on how electrical systems are designed and how devices perform.

The most noticeable difference is that a 60Hz supply completes 20% more cycles per second than a 50Hz supply. This means motors whose speed follows the line frequency will generally run faster on 60Hz. However, it’s not as simple as just plugging a 50Hz device into a 60Hz outlet and expecting a speed boost; many devices aren’t built to handle that change without issues.

Here’s a quick rundown of some key distinctions:

- Speed of Oscillation: 50Hz means 50 cycles per second, while 60Hz means 60 cycles per second. This is the core difference.

- Equipment Design: Devices are often optimized for a specific frequency. Running a 50Hz appliance on 60Hz power might make it run faster but could also cause overheating or premature wear if not designed for it. The reverse can also be true.

- Transmission Efficiency: The difference here is small. 60Hz allows slightly smaller transformers, while 50Hz sees slightly lower line reactance and skin-effect losses over very long distances. This is a complex factor influenced by many variables.

- Motor Speed: Motors designed for 60Hz will naturally spin faster than identical motors designed for 50Hz.
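To put numbers on the list above, here is a minimal sketch that converts each frequency into the length of one cycle and compares the two. The function name `cycle_period_ms` is just an illustrative choice.

```python
def cycle_period_ms(frequency_hz: float) -> float:
    """Return the duration of one full AC cycle in milliseconds."""
    return 1000.0 / frequency_hz


for f in (50, 60):
    print(f"{f} Hz -> {cycle_period_ms(f):.2f} ms per cycle")

# 60 / 50 = 1.2, so a 60 Hz supply completes 20% more cycles each second.
ratio = 60 / 50
print(f"60 Hz completes {ratio - 1:.0%} more cycles per second than 50 Hz")
```

One cycle lasts 20 ms at 50Hz and about 16.67 ms at 60Hz, which is where the 20% figure comes from.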

While there are technical differences, for the average person using everyday appliances, the frequency difference often doesn’t matter much. Most modern electronics are designed to work within a range of frequencies or come with power adapters that handle the conversion. The real impact is felt more by manufacturers and utility companies when designing and maintaining the grid. It’s why you might need a frequency converter when traveling between regions with different standards.

3. Efficiency and Power Transmission

When we talk about how electricity gets from the power plant to our homes, the frequency, whether it’s 50Hz or 60Hz, plays a role. Think of it like this: higher frequencies can mean smaller, lighter transformers for the same amount of power. This is because the magnetic core inside them doesn’t need to be as big. It’s a bit of a trade-off, though.

While smaller transformers are cheaper and easier to handle, higher frequencies can lead to more energy loss over long power lines. This is due to something called the skin effect, where electricity tends to flow more on the surface of a conductor at higher frequencies, effectively making the wire seem thinner and increasing resistance. So, for really long distances, lower frequencies like 50Hz or 60Hz are generally preferred because they don’t suffer as much from these line losses.

Here’s a quick look at how frequency can affect things:

- Transformer Size: Higher frequency = smaller transformer for the same power.

- Line Losses: Higher frequency = potentially more energy lost over long distances.

- Equipment Design: Different frequencies require different designs for motors and other electrical gear.
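The transformer-size point can be illustrated with the standard transformer EMF equation for a sinusoidal supply, E = 4.44 · f · N · A · B_max. The sketch below solves it for the core cross-section A. The winding voltage, turn count, and peak flux density are illustrative assumptions, not values for any real transformer.

```python
def core_area_cm2(v_rms: float, frequency_hz: float,
                  turns: int, b_max_tesla: float) -> float:
    """Core cross-section needed so peak flux density stays at b_max_tesla.

    Rearranges E_rms = 4.44 * f * N * A * B_max for A (sinusoidal supply).
    """
    area_m2 = v_rms / (4.44 * frequency_hz * turns * b_max_tesla)
    return area_m2 * 1e4  # convert m^2 to cm^2


# Assumed example winding: 230 V, 500 turns, 1.2 T peak flux density.
for f in (50, 60, 400):
    area = core_area_cm2(230.0, f, 500, 1.2)
    print(f"{f:>3} Hz: core area of roughly {area:.1f} cm^2")
```

With everything else held fixed, the required core area scales as 1/f, so a 60Hz core can be about 17% smaller in cross-section than a 50Hz one, and a 400Hz core about one eighth the size.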

The choice of frequency for a power grid is really a balancing act. Engineers have to weigh the benefits of smaller equipment against the costs of increased energy loss during transmission. It’s not a simple case of one being universally better than the other; it depends on what you’re trying to achieve.

For instance, in places like aircraft or submarines, where space and weight are super important, you’ll often find higher frequencies like 400Hz. This allows for much smaller and lighter electrical components. But you won’t see 400Hz being used to send power across a whole country because the losses would be way too high. It’s usually kept within a vehicle or a building. So, while 50Hz and 60Hz are the global standards for grid power, other frequencies have their own specific uses where their unique properties are a big advantage.
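One way to see why 400Hz stays inside a vehicle or building is the series inductive reactance of a line, X = 2π · f · L, which grows in direct proportion to frequency. The sketch below assumes a round-number line inductance of 1 mH per km purely for illustration; real lines vary with conductor spacing and geometry.

```python
import math


def inductive_reactance_ohms(frequency_hz: float, inductance_h: float) -> float:
    """Series inductive reactance X_L = 2 * pi * f * L."""
    return 2.0 * math.pi * frequency_hz * inductance_h


# Assumption: 1 mH per km over a 100 km line (illustrative values only).
line_inductance_h = 1e-3 * 100

for f in (50, 60, 400):
    x = inductive_reactance_ohms(f, line_inductance_h)
    print(f"{f:>3} Hz: reactance of roughly {x:.1f} ohms")
```

Under these assumptions the same line presents eight times the reactance at 400Hz as at 50Hz, which is acceptable across an airframe but not across a country.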

4. Impact on Motor Speed and Performance

So, how does the frequency of your power supply actually mess with motors? It’s pretty straightforward, really. The speed an AC motor spins at is directly tied to the frequency of the electricity feeding it. Think of it like this: the alternating current sets up a rotating magnetic field inside the motor, and that field drags the rotor around with it. A higher frequency makes the field rotate faster, making the motor spin faster.

A 60Hz system will generally make a motor run about 20% faster than the same motor on a 50Hz system. This isn’t some minor tweak; it’s a noticeable difference that affects how equipment performs. For example, a motor designed for 60Hz might run a bit sluggishly if you plug it into a 50Hz supply, and conversely, a 50Hz motor might overheat or struggle if forced to run on 60Hz without proper adjustments.

Here’s a quick look at how frequency affects synchronous motor speed:

| Frequency (Hz) | Poles | Synchronous Speed (RPM) |
|---|---|---|
| 50 | 2 | 3000 |
| 50 | 4 | 1500 |
| 60 | 2 | 3600 |
| 60 | 4 | 1800 |

This relationship is governed by a simple formula: Synchronous Speed (RPM) = (120 * Frequency) / Number of Poles. So, if you double the poles, you halve the speed, and if you change the frequency, the speed changes proportionally. It’s why manufacturers have to be careful about which frequency their motors are designed for, especially when exporting products. You wouldn’t want your new appliance to run slower or faster than intended, right? It’s also why you might need a frequency converter if you’re bringing equipment from a country with a different standard, like using a 60Hz motor in a 50Hz country.
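The formula above is easy to check in a few lines. This is a minimal sketch; the function name and the even-pole check are illustrative choices, and real induction motors run slightly below synchronous speed because of slip.

```python
def synchronous_speed_rpm(frequency_hz: float, poles: int) -> float:
    """Synchronous speed in RPM = (120 * frequency) / number of poles."""
    if poles <= 0 or poles % 2 != 0:
        raise ValueError("poles must be a positive even number")
    return 120.0 * frequency_hz / poles


# Reproduce the table: 2- and 4-pole machines on 50 Hz and 60 Hz.
for f in (50, 60):
    for p in (2, 4):
        print(f"{f} Hz, {p} poles -> {synchronous_speed_rpm(f, p):.0f} RPM")
```

Swapping a 4-pole motor from 60Hz to 50Hz drops its synchronous speed from 1800 to 1500 RPM, the same 6:5 ratio as the frequencies themselves.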

The choice of frequency isn’t just about motor speed; it also influences the design and efficiency of electrical equipment. Manufacturers have to balance the benefits of higher speeds with the potential for increased losses or the need for more robust designs. It’s a bit of an engineering balancing act.

For specialized applications, like in aircraft, higher frequencies such as 400Hz are used. This allows for smaller, lighter motors and transformers, which is a big deal when space and weight are at a premium. However, these high frequencies aren’t practical for long-distance power transmission due to increased line impedance. So, while 400Hz is great for a plane, it’s not going to power your whole city. If you’re looking into generators, it’s worth considering the intended use and adding a buffer to your estimated power needs; for offshore setups, consulting a marine electrician is a good idea [199b]. It really pays to get the specs right for your specific situation.

5. Voltage Standards Associated With Frequencies

When we talk about 50Hz versus 60Hz power, it’s not just the frequency that differs. The voltage levels typically paired with these frequencies also tend to vary across different regions. It’s a bit like how different countries have different plug types – it all comes down to historical choices and what made sense at the time for equipment and safety.

Generally speaking, areas that use a 50Hz standard often operate with higher voltages, typically in the 220-240V range. Think of most of Europe, the UK, and large parts of Asia and Africa. On the other hand, countries that adopted the 60Hz standard, like the United States and Canada, usually stick to lower voltages, commonly between 100-127V. Japan is an interesting case, using both 50Hz and 60Hz in different parts of the country, though its nominal voltage is 100V in both.

Here’s a quick look at the common pairings:

- 50Hz: Often associated with 220-240V (e.g., Europe, UK, Australia, most of Asia and Africa).

- 60Hz: Typically paired with 100-127V (e.g., North America, parts of South America, western Japan).

It’s important to remember these are general trends, and there can be exceptions or variations within countries. The choice of voltage alongside frequency was influenced by factors like the intended use of electricity, the distance power needed to be transmitted, and the types of appliances that were being developed. For instance, higher voltages can be more efficient for transmitting power over long distances, but they also require more robust insulation and safety measures. This historical divergence in voltage and frequency standards is a key reason why electrical equipment isn’t always directly interchangeable between regions without adapters or converters.

The decision to standardize on specific frequencies and voltages wasn’t a single, global event. It evolved over time, driven by the companies that built the early electrical infrastructure and the equipment that consumers would use. These choices, made decades ago, continue to shape how we power our world today, influencing everything from the design of our appliances to the way our power grids are managed. It’s a fascinating look at how early engineering decisions have such lasting impacts.

Understanding these differences is super important if you’re traveling or importing electronics. You’ll often see dual-voltage devices, but it’s always good to check the label to make sure your gadgets can handle the local power grid standards.
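Checking a label can be sketched in code. The helpers below, `parse_label` and `is_compatible`, are hypothetical and handle only simple markings such as "100-240V~ 50/60Hz" or "120V 60Hz". The 10% voltage tolerance is an assumption for illustration, not a figure from any standard.

```python
import re


def parse_label(label: str):
    """Extract (min_volts, max_volts, set_of_frequencies) from a rating label."""
    volts = re.search(r"(\d+)(?:\s*-\s*(\d+))?\s*V", label)
    hertz = re.search(r"(\d+)(?:\s*[/-]\s*(\d+))?\s*Hz", label, re.IGNORECASE)
    if not volts or not hertz:
        raise ValueError(f"could not read rating from {label!r}")
    v_min = int(volts.group(1))
    v_max = int(volts.group(2) or volts.group(1))
    freqs = {int(g) for g in hertz.groups() if g}
    return v_min, v_max, freqs


def is_compatible(label: str, mains_volts: float, mains_hz: int,
                  tolerance: float = 0.10) -> bool:
    """True if the local supply falls inside the label's voltage and frequency."""
    v_min, v_max, freqs = parse_label(label)
    voltage_ok = v_min * (1 - tolerance) <= mains_volts <= v_max * (1 + tolerance)
    return voltage_ok and mains_hz in freqs


print(is_compatible("100-240V~ 50/60Hz", 230, 50))  # dual-voltage charger
print(is_compatible("120V 60Hz", 230, 50))          # single-standard appliance
```

A device marked with a voltage range and "50/60Hz" passes on either kind of grid, while a single-voltage, single-frequency appliance only passes at home.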

6. Global Distribution of Frequencies

It’s pretty wild when you think about it, but the frequency of the electricity powering our homes and gadgets isn’t the same everywhere. Back in the day, when electrical grids were first being set up, there wasn’t a clear winner. Lots of different frequencies were tried out, and honestly, some of them seem pretty strange now.

For instance, early on, you had places using 133 Hz, 125 Hz, and even 66.7 Hz. It was kind of a free-for-all as different companies and countries experimented. The US ended up leaning towards 60 Hz, largely thanks to Westinghouse, while Europe gravitated towards 50 Hz, with companies like AEG and Oerlikon playing a big role. This split basically set the stage for the two dominant frequencies we see today.

So, where do you find what? Well, it’s a bit of a map:

- 60 Hz: You’ll find this standard in North America (the US and Canada, mostly), parts of South America, and a few other scattered regions.

- 50 Hz: This is the big one globally, covering most of Europe, the UK, Russia, Australia, and a huge chunk of Asia and Africa.

It’s not just a simple split, though. Japan is a really interesting case, actually using both 50 Hz and 60 Hz depending on the region. Tokyo and the surrounding areas run on 50 Hz, while Osaka and western Japan use 60 Hz. This historical quirk comes from how their early power systems were developed by different companies.

The reason for these differences often boils down to historical choices made during the early days of electrification. Different inventors, companies, and national priorities led to the adoption of various frequencies, and once a system is in place, changing it is a massive undertaking. It’s easier to build new systems that match existing ones in a region.

There were also periods where other frequencies were common for specific uses. For example, 25 Hz was used for some industrial applications and early electric railways because it was better suited for motor operation at the time. Even 40 Hz popped up in places. But over time, the advantages of 50 Hz and 60 Hz for general power distribution, especially concerning efficiency and compatibility with lighting, led to them becoming the de facto global standards.

7. Early Frequency Standards and Experiments

Back in the day, when electricity was just starting to get going, things were pretty wild. There wasn’t just one or two frequencies; it was a whole mix-and-match situation. Imagine trying to plug in your toaster and finding out it only works at, say, 41 and two-thirds Hertz! It was a bit like that.

Companies were trying out all sorts of frequencies, and different places settled on different ones. For example, some early systems in the UK used 133 Hz for lighting, while others, like the Stanley-Kelly Company, were using 66.7 Hz. Even General Electric had a hand in this, with systems running at 125 Hz, 62.5 Hz, and even 40 Hz. It really depended on what the engineers thought was best at the time, often balancing needs for lighting, motors, and how far the power had to travel. The lack of a universal standard made trading electrical equipment a real headache.

Here’s a look at some of the frequencies floating around in the early days:

- 133 Hz: Used in some UK and European single-phase lighting systems.

- 83.3 Hz: Ferranti in the UK used this for their Deptford Power Station.

- 40 Hz: A popular choice for some, with demonstrations like the Lauffen-Frankfurt transmission using it.

- 25 Hz: Often chosen for motor applications and industrial use, like at Westinghouse’s Niagara Falls project.

The push for standardization really picked up steam as more power was generated and distributed. It wasn’t just about making things work; it was about making them work together across different companies and regions. This early experimentation, while messy, laid the groundwork for the systems we rely on today.

It’s interesting to see how some of these frequencies persisted for quite a while. Even into the mid-20th century, you could find places still operating on frequencies like 25 Hz or 40 Hz, alongside the emerging 50 Hz and 60 Hz standards. This period of experimentation was vital for figuring out what worked best for transmitting and using electrical power, and you can read more about the early experiments with single-phase electric power from as far back as 1897.

8. The Role of Nikola Tesla

Nikola Tesla, a name synonymous with electrical innovation, played a significant part in the AC versus DC debate and, by extension, the frequency standards we use today. While Thomas Edison championed direct current (DC), Tesla was a fervent advocate for alternating current (AC). His groundbreaking work on the rotating magnetic field was the bedrock for AC motors and generators.

Tesla’s experiments often explored frequencies higher than the eventual 50Hz or 60Hz standards. He even demonstrated synchronized clocks using line frequency at the 1893 Chicago World’s Fair, showcasing the potential for precise timekeeping via the power grid. This concept is still relevant today, as grid operators subtly adjust frequency to keep clocks accurate.
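To see why those adjustments matter, consider a clock that counts mains cycles: it runs fast or slow in proportion to the grid’s frequency error. The sketch below is a rough illustration, and the 59.98Hz figure is an assumed example rather than measured data.

```python
def clock_error_seconds(actual_hz: float, nominal_hz: float,
                        duration_s: float) -> float:
    """Time gained (+) or lost (-) by a clock that counts mains cycles."""
    return duration_s * (actual_hz - nominal_hz) / nominal_hz


# Assumed example: a 60 Hz grid running at 59.98 Hz for one hour.
error = clock_error_seconds(59.98, 60.0, 3600.0)
print(f"Clock error after one hour: {error:+.2f} s")
```

In this example the clock falls about 1.2 seconds behind, which an operator could cancel by running slightly above 60Hz for a while afterwards.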

It’s interesting to note that while Westinghouse, a major backer of Tesla’s work, eventually settled on 60Hz in North America, partly to better serve arc lighting needs at the time, Tesla’s induction motor designs actually performed well at lower frequencies. This led to some interesting choices in early power system development.

The early days of electricity were a bit of a wild west, with different companies and inventors pushing their own ideas. It wasn’t a clear path to the standards we have now; there were a lot of competing interests and technical considerations that shaped the outcome.

Here’s a look at some of Tesla’s contributions and their impact:

- AC Motor Principles: Developed the induction motor, which relies on rotating magnetic fields.

- Frequency Synchronization: Showcased the use of AC frequency for timekeeping.

- Advocacy for AC: His persistent promotion of AC systems was key to their widespread adoption over DC.

Tesla’s vision and inventions were instrumental in establishing the AC power systems that form the backbone of our modern electrical infrastructure. His work directly influenced the development of the AC machinery we rely on daily.

9. Westinghouse vs. General Electric

Back in the day, when AC power was still finding its feet, there was a bit of a showdown between Westinghouse and General Electric. It wasn’t just about who could build the biggest power plant; it was about which frequency would become the standard. Westinghouse, leaning on Nikola Tesla’s work, was pushing for 60 Hz. They figured it was a good balance for both lighting and the newfangled induction motors they were developing. This frequency offered a decent compromise for early electrical needs.

General Electric, on the other hand, had ties to European companies and initially experimented with 50 Hz, especially for projects like the one in Redlands, California. However, to compete with Westinghouse’s growing market share, GE eventually switched its major projects to 60 Hz as well. It’s kind of funny to think about how these big decisions, made over a century ago, still affect us today. It really shows how much influence early engineering choices have.

It wasn’t always a clear path to 60 Hz, though. The Niagara Falls project, a huge deal at the time, ended up using 25 Hz because the turbine speeds were already set. This low frequency became a North American standard for a while, especially for heavy motor loads. Other frequencies like 40 Hz also had their moments, with systems built in places like the UK and Italy.

Even as late as 1946, you could find regions using frequencies like 25 Hz or 40 Hz, alongside the emerging 50 Hz and 60 Hz standards. It was a real mix-and-match situation for a while before things really settled down. If you’re looking at backup power, understanding these historical choices can even inform decisions about generators, like choosing a reliable Vanguard generator for your home.

Here’s a quick look at some of the frequencies that were in play:

- 133 Hz: Common for early lighting systems.

- 60 Hz: Westinghouse’s preferred frequency, eventually adopted widely.

- 50 Hz: European standard, also adopted by GE for some projects.

- 25 Hz: Used for large industrial loads and early AC systems like Niagara Falls.

- 40 Hz: A compromise frequency used in some early networks.

The competition between these early electrical giants wasn’t just about technology; it was about market dominance and setting the stage for the future of electricity distribution. The choices made then, often driven by a mix of technical merit and business strategy, shaped the electrical landscape we use every day.

10. Frequency Converters and Compatibility

So, you’ve got different electrical systems running on different frequencies, like 50Hz and 60Hz. What happens when you need them to talk to each other? That’s where frequency converters come in. These are basically devices that change the frequency of electricity. Think of them like a translator for power.

The main challenge is that generators are designed to spin at specific speeds to produce a certain frequency. The impact of frequency on generators is pretty direct; changing the frequency means you’d have to change how fast the generator spins, or how many magnetic poles it has. It’s not a simple flip of a switch.

Here’s a quick look at why this matters:

- Generator Design: The rotational speed (RPM) of a generator is directly tied to the frequency it produces and the number of poles in its design. For example, a 60Hz generator with 4 poles spins at 1800 RPM, while a 50Hz generator with the same poles spins at 1500 RPM.

- Equipment Compatibility: Appliances and motors are often built to work best at a specific frequency. Running a 60Hz motor on 50Hz power might make it run slower, while a 50Hz motor on 60Hz power might run faster, potentially causing issues.

- Grid Interconnection: Connecting two power grids with different frequencies is tricky. They need to be synchronized, which usually requires specialized equipment like rotary converters or modern static inverters. These can be expensive and also lose some energy during the conversion process.

Historically, converting between frequencies like 25Hz and 60Hz was quite a task. The machines needed to do this were often large and costly. Even today, while technology has improved, converting power frequencies isn’t always straightforward or cheap. For instance, if you’re looking at buying a generator, especially from overseas, it’s smart to check its specifications carefully to ensure it matches your local grid requirements. Understanding the rated vs. maximum power is just one part of making sure it’s the right fit.

While high frequencies like 400Hz are great for making things like transformers and motors smaller and lighter, especially for aircraft, they aren’t practical for long-distance power transmission. The increased impedance at higher frequencies makes it difficult to send power over long lines. So, these high-frequency systems are usually kept within a single building or vehicle.

11. Lighting Flicker Considerations

When we talk about AC power frequencies, one of the things that really comes up is how it affects lighting. Back in the day, especially with older types of lights like incandescent or arc lamps, the frequency of the power supply made a big difference in how steady the light appeared. If the frequency was too low, the light could seem to flicker. This is because the light output changes as the AC current goes through its cycle.

The frequency of the power grid is a compromise between many factors, and lighting is definitely one of them. For instance, early on, some systems used frequencies around 25Hz or 40Hz. While lower frequencies could be good for certain things like motors or long transmission lines, they often caused a noticeable flicker in lights. People found this annoying, and it was a reason why frequencies were eventually standardized higher. For example, some European systems moved from 40Hz up to 50Hz partly to reduce this flicker effect.

Here’s a general idea of how frequency relates to lighting perception:

- Incandescent Lamps: The filament heats up and cools down with each cycle. Lower frequencies mean more time for cooling, leading to more noticeable brightness changes.

- Arc Lamps: These are even more sensitive. Lower frequencies could cause them to buzz audibly and flicker more intensely.

- Modern Lighting (LEDs): While LEDs are different, they can still be affected. An LED fixture runs from rectified AC, so if its internal driver electronics don’t smooth the supply well, the light output can pulse at twice the line frequency (100Hz on a 50Hz grid, 120Hz on a 60Hz grid). This flicker is usually less pronounced than with older technologies, but you can sometimes see it if you film modern lights with a high-speed camera, or as rolling bands when the camera’s frame rate doesn’t line up with the power grid frequency.
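Light from a simple mains-powered lamp tends to pulse at twice the line frequency because the power delivered to a resistive load follows sin², which peaks on both the positive and negative half-cycles. The sketch below checks this by brute-force sampling. The function names and the 10 kHz sample rate are illustrative choices.

```python
import math


def flicker_frequency_hz(line_hz: float) -> float:
    """Power into a resistive load peaks twice per AC cycle."""
    return 2.0 * line_hz


def count_power_peaks(line_hz: float, sample_rate: int = 10_000) -> int:
    """Count peaks of sin^2(2*pi*f*t) over one second of samples."""
    power = [math.sin(2 * math.pi * line_hz * n / sample_rate) ** 2
             for n in range(sample_rate)]
    return sum(
        1 for n in range(1, sample_rate - 1)
        if power[n - 1] < power[n] >= power[n + 1]
    )


for f in (25, 50, 60):
    print(f"{f} Hz supply: {count_power_peaks(f)} power peaks per second, "
          f"formula gives {flicker_frequency_hz(f):.0f}")
```

A 25Hz supply pulses only 50 times a second, slow enough for many people to notice, while 50Hz and 60Hz supplies pulse 100 and 120 times a second.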

The goal was always to find a frequency that worked well enough for most applications. Too low, and you get flicker and audible hums from lights and motors. Too high, and you start running into other issues like increased losses in transformers and transmission lines. It’s a balancing act.

So, while we don’t often notice flicker from our standard 50Hz or 60Hz power today, it was a significant factor in deciding which frequencies would become the global standard. It’s a good reminder that even seemingly small technical details can have a big impact on our everyday experience.

12. Wire Management and Design Implications

When you’re setting up electrical systems, the frequency you choose, whether it’s 50Hz or 60Hz, actually has some knock-on effects on how you manage the wiring and design the overall setup. It’s not just about the power itself.

One thing to consider is the skin effect. This is where AC current tends to flow more on the outer surface of a conductor rather than through the middle. At 60Hz, this effect is a bit more pronounced than at 50Hz, especially with larger wires. This means that for high-current applications, the actual conductive area of the wire might be less than its total cross-sectional area. This can influence the size and type of conductors you need to use to carry the same amount of current efficiently.

Here’s a quick look at how frequency can affect conductor choices:

- Higher Frequency (60Hz): Can lead to increased resistance due to skin effect, potentially requiring larger or specially constructed conductors (like stranded or hollow wires) for high-current applications to compensate.

- Lower Frequency (50Hz): Generally experiences less skin effect, meaning a solid conductor might be more efficient for a given current, but transformers and motors tend to need somewhat more iron and copper for the same power rating compared to a well-designed 60Hz system.

- Voltage Standards: Different frequencies are often associated with different voltage standards. For example, 60Hz is common in North America with voltages like 120V and 240V, while 50Hz is prevalent in Europe and other regions with voltages like 230V. These voltage differences directly impact wire sizing and insulation requirements.
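The size of the skin effect can be estimated with the usual skin-depth formula, δ = sqrt(ρ / (π · f · μ)). The sketch below uses a textbook resistivity for copper at room temperature, which is an assumed material value, and treats copper as non-magnetic.

```python
import math

MU_0 = 4e-7 * math.pi          # permeability of free space, H/m
COPPER_RESISTIVITY = 1.68e-8   # ohm-metres, approximate room-temperature value


def skin_depth_mm(frequency_hz: float,
                  resistivity: float = COPPER_RESISTIVITY,
                  relative_permeability: float = 1.0) -> float:
    """Skin depth: delta = sqrt(rho / (pi * f * mu_0 * mu_r)), in millimetres."""
    mu = MU_0 * relative_permeability
    return math.sqrt(resistivity / (math.pi * frequency_hz * mu)) * 1000.0


for f in (50, 60, 400):
    print(f"{f:>3} Hz: skin depth in copper of roughly {skin_depth_mm(f):.1f} mm")
```

Under these assumptions the skin depth in copper is a little over 9 mm at 50Hz and about 8.4 mm at 60Hz, so the effect only matters for conductors thicker than ordinary household wiring.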

The choice of frequency can subtly influence the physical layout and material selection for electrical infrastructure. While the difference between 50Hz and 60Hz might seem small, it can affect the efficiency of conductors, especially in large-scale power transmission where even minor losses add up. This means engineers have to think about conductor geometry and material properties more carefully at higher frequencies to maintain performance.

So, while most everyday appliances work fine on either frequency, the underlying infrastructure design, especially for high-power transmission lines and industrial equipment, does take these frequency-related electrical phenomena into account. It’s all about making sure the electricity gets where it needs to go with minimal fuss.

13. Industrial and Specialized Frequencies

While 50Hz and 60Hz are the big players for general power grids, the world of electricity gets a lot more interesting when you look at industrial and specialized uses. Think about it – not every application needs the same kind of power.

For instance, high frequencies like 400Hz are a big deal in aviation and military gear. Why? Because they let you make transformers and motors much smaller and lighter. This is a huge advantage when you’re dealing with aircraft or submarines where every ounce and inch counts. The trade-off is that these high frequencies can’t really be sent over long distances economically, because line impedance rises with frequency and the voltage drop and losses become too large. So, you usually find 400Hz systems contained within a single vehicle or building. It’s a neat example of how the specific needs of an application can dictate the power frequency used. You can find charts detailing global voltages and frequencies that show these differences, offering a comprehensive guide for international use.

Then there are the lower frequencies, like 25Hz or 16.7Hz, which you’ll often see in railway systems. These frequencies are good for certain types of electric motors used in trains, especially older designs. The 16.7Hz figure started out as 16 2/3 Hz, exactly one third of 50Hz, which looks like an odd number but works well for traction power needs. These systems might get their power from dedicated stations or through frequency converters that change the standard grid power.

Here’s a quick look at some of these specialized frequencies and their common uses:

- 400 Hz: Aircraft, spacecraft, military equipment, server rooms. Benefits include smaller, lighter components.

- 25 Hz: Some railway systems (like Amtrak in the US), industrial furnaces. Historically used for early AC motors.

- 16.7 Hz (or 16 2/3 Hz): Primarily used for electric railways in Europe (e.g., Germany, Austria, Switzerland).

It’s fascinating how different frequencies were experimented with in the early days of electricity. Before 50Hz and 60Hz became the standards, there were all sorts of frequencies in use, like 133Hz, 83.3Hz, and even 25Hz for various lighting and motor applications. This variety shows that the choice of frequency wasn’t always straightforward and often depended on the technology available and the specific problems engineers were trying to solve at the time.

So, while most of us are plugged into 50Hz or 60Hz, the electrical world is a lot more diverse, with specialized frequencies playing important roles in keeping everything from planes in the sky to trains on the tracks running smoothly.

14. Stability and Grid Load Effects

The frequency of the power grid isn’t just a number; it’s a really important indicator of how stable the whole system is. Think of it like the heartbeat of the electrical network. When the demand for electricity suddenly jumps up, or if a big generator goes offline unexpectedly, the grid’s frequency can dip. This happens because the extra load is briefly met by drawing energy out of the spinning generators, so their speed, which dictates the frequency, drops a bit. Conversely, if there’s a sudden drop in demand, the frequency can rise.

Maintaining a steady frequency is key to preventing blackouts. Grid operators use sophisticated systems to constantly monitor and adjust the output of generators to keep the frequency right where it should be, usually 50Hz or 60Hz depending on the region. This process is called frequency regulation.

Here’s a breakdown of how load affects frequency:

- Increased Load: When more electricity is being used than is being generated, the generators slow down, causing the frequency to drop. This is a sign the grid is under stress.

- Decreased Load: When less electricity is being used, the generators can speed up slightly, causing the frequency to rise.

- Sudden Disturbances: Major events like transmission line failures or generator trips can cause rapid and significant frequency deviations, which protective systems are designed to counteract.

Different frequencies can have slightly different impacts on grid stability. For instance, a 60Hz system might react differently to sudden load changes compared to a 50Hz system, though the underlying principles of balancing generation and load remain the same. The inertia of the rotating masses in generators plays a role in how quickly the frequency responds to disturbances. Newer systems with more inverter-based resources, like solar and wind farms, behave differently as they don’t have the same physical inertia. These systems need to be programmed to respond to frequency signals, much like traditional generators do, to help keep the grid stable. You can find more information on how transformers are affected by frequency on pages discussing transformer core losses.

The delicate balance between electricity supply and demand is constantly managed. Even small, temporary dips or spikes in frequency are normal, but large, rapid changes are a warning sign that the system is struggling to keep up. This is why grid operators are always working to ensure generation matches consumption in real-time.
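To put rough numbers on the role of inertia, here is a minimal sketch based on the textbook swing-equation approximation, where the initial rate of change of frequency is the nominal frequency times the power imbalance divided by twice the inertia constant. The function name `initial_rocof` and the sample values (a 10% generation shortfall, an inertia constant of 5 seconds) are illustrative assumptions, not figures from any real grid.

```python
def initial_rocof(nominal_hz: float, imbalance_pu: float, inertia_s: float) -> float:
    """Initial rate of change of frequency in Hz per second.

    imbalance_pu: (generation - load) / system rating; negative means a shortfall.
    inertia_s: aggregate inertia constant H in seconds (an assumed value here).
    """
    if inertia_s <= 0:
        raise ValueError("inertia constant must be positive")
    return nominal_hz * imbalance_pu / (2.0 * inertia_s)


# Hypothetical event: 10% of generation trips on a system with H = 5 s.
for hz in (50.0, 60.0):
    print(f"{hz:.0f} Hz grid: {initial_rocof(hz, -0.10, 5.0):+.2f} Hz/s")
```

In this simplified picture, halving the inertia constant doubles how fast the frequency starts to fall, which is one way to see why grids with lots of inverter-based generation need fast, programmed frequency response.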

15. Appliance Functionality Across Frequencies

So, you’ve got your power grid humming along at either 50Hz or 60Hz, and you’re probably wondering if your toaster or your fancy new blender cares which one it’s plugged into. For the most part, the answer is a resounding “not really.” Most modern appliances are designed with a bit of wiggle room, meaning they’ll happily chug along whether they’re getting 50 or 60 cycles per second. Manufacturers tend to design for the standard in their primary market, so if you’re in the US, your stuff is probably tuned for 60Hz, and if you’re in Europe, it’s likely 50Hz.

However, there are some nuances. Motors are a big one. A motor designed for 60Hz will spin about 20% faster than an identical motor designed for 50Hz. This can sometimes lead to slightly different performance characteristics. For example, a fan might move a bit more air at 60Hz, or a washing machine’s spin cycle could be a tad quicker. It’s usually not a deal-breaker, but it’s something to be aware of.

Here’s a quick look at how some common items might behave:

- Motors: As mentioned, they’ll run faster at 60Hz. This can sometimes mean a slight increase in efficiency or, conversely, more heat if the motor isn’t designed for the higher speed.

- Heating Elements: These are generally unfazed by the frequency difference. Whether it’s 50Hz or 60Hz, the resistance is the same, so they’ll produce heat pretty much identically.

- Electronics (like TVs, computers): Most modern electronics use power supplies that convert the AC power to DC very early on. The frequency of the AC input usually doesn’t matter much to these internal circuits, as long as the voltage is correct. They’re pretty adaptable.

It’s worth noting that older appliances, especially those with very specific motor designs or timing mechanisms, might be more sensitive to frequency changes. Think of old electric clocks that used the power frequency for their timing; these would run noticeably faster on 60Hz than on 50Hz.
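The clock example is easy to check with a little arithmetic. The sketch below assumes an idealized mains-synchronous clock whose hands advance in direct proportion to the supply frequency; the function name `clock_reading_hours` is made up for illustration.

```python
def clock_reading_hours(elapsed_hours: float, design_hz: float, supply_hz: float) -> float:
    """Hours shown by a mains-synchronous clock after elapsed_hours of real time."""
    return elapsed_hours * supply_hz / design_hz


# A clock built for 50 Hz, plugged into a 60 Hz supply for one real day.
shown = clock_reading_hours(24.0, design_hz=50.0, supply_hz=60.0)
print(f"Clock shows {shown:.1f} h after 24 h, a gain of {shown - 24.0:.1f} h")
```

Under that assumption, the clock gains nearly five hours a day, which is why this kind of device really does need the right frequency.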

When you’re dealing with appliances that are sensitive to frequency, or if you’re moving between regions with different standards, you might need a frequency converter. These devices can change the 50Hz power to 60Hz, or vice versa. They’re not super common for household use, but they exist, especially for specialized equipment. For most everyday items, though, the difference between 50Hz and 60Hz is pretty minor. It’s more about making sure the voltage matches when you’re traveling. If you’re setting up a new system, like a home battery setup, you’ll want to match the frequency to your local grid for compatibility with your existing appliances.

| Appliance Type | Behavior at 60Hz vs. 50Hz | Notes |
|---|---|---|
| Motors | Faster rotation | Potential for increased heat or efficiency |
| Heating Elements | Negligible difference | Resistance is the primary factor |
| Simple Electronics | Generally unaffected | Power supplies adapt to frequency |
| Older Clocks | Runs faster | Timing mechanisms are frequency-dependent |

16. The Future of Utility Frequencies

So, what’s next for the frequencies powering our world? Honestly, it’s not like we’re going to suddenly switch everything from 60Hz to 50Hz, or vice versa, overnight. The infrastructure is just too massive, and the cost of changing out every transformer, motor, and appliance would be astronomical. Plus, for the most part, the current frequencies work pretty well.

However, there are always niche situations and new developments. For instance, some specialized industrial applications or even certain types of electric vehicles might benefit from different frequencies. Think about things like high-speed rail or specific manufacturing processes that could be more efficient with a tailored frequency. It’s less about a global shift and more about localized optimization.

We’ve also seen the rise of HVDC (High-Voltage Direct Current) for long-distance power transmission. While not a frequency in the AC sense, it bypasses some of the issues associated with AC frequencies over long lines. It’s a different approach to moving electricity, and its role is likely to grow.

Here’s a quick look at why sticking with the current standards makes sense:

- Inertia and Stability: Existing grids have a certain inertia built into their frequency. Changing this could have unpredictable effects on grid stability, especially during disturbances.

- Equipment Compatibility: Billions of dollars worth of equipment worldwide is designed for either 50Hz or 60Hz. A universal switch would require a complete overhaul.

- Historical Precedent: The current frequencies are deeply embedded in our electrical history and standards. Major shifts are rare and usually driven by significant technological breakthroughs or economic necessities.

While the idea of a universal frequency might sound appealing for simplicity, the practicalities of global infrastructure mean that 50Hz and 60Hz are likely to remain the dominant utility frequencies for the foreseeable future. Any changes will probably be incremental and application-specific, rather than a wholesale replacement.

17. Understanding AC Waveforms

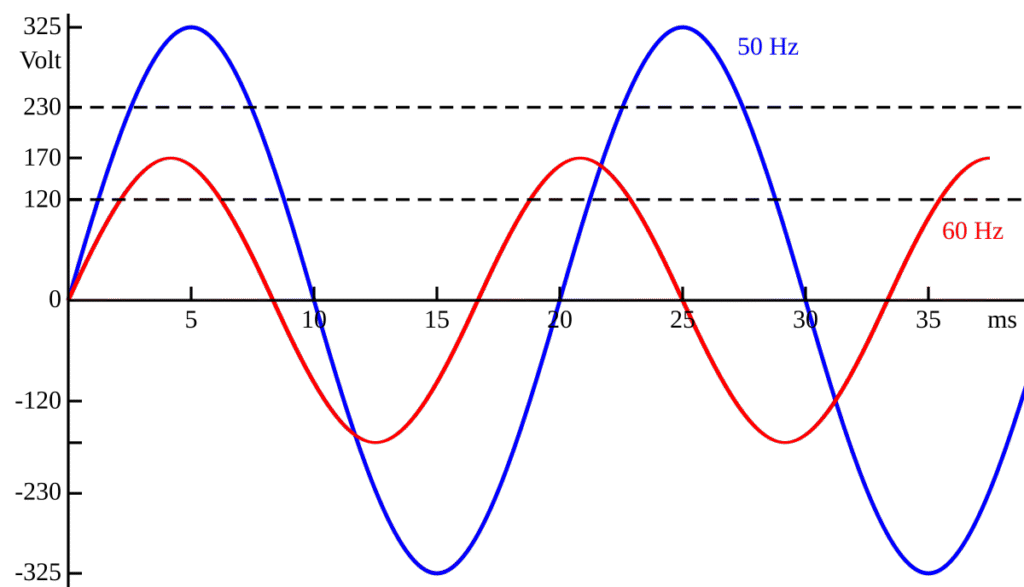

When we talk about 50Hz versus 60Hz power, we’re really talking about the frequency of the alternating current (AC) that flows through our electrical systems. Think of it like a wave. AC power isn’t a steady stream like DC; it cycles back and forth. The frequency tells us how many of these complete cycles happen in one second. So, 50Hz means 50 cycles per second, and 60Hz means 60 cycles per second.

The shape of this wave matters, and it’s usually a sine wave. This smooth, oscillating pattern is what allows transformers to work their magic, stepping voltages up or down for efficient transmission and safe use. The speed at which this wave oscillates directly impacts how devices designed for that frequency behave.

Here’s a quick look at how frequency relates to other electrical concepts:

- Cycles: One complete back-and-forth movement of the AC current.

- Hertz (Hz): The unit of measurement for frequency, meaning cycles per second.

- Period: The time it takes for one complete cycle to occur. For 60Hz, the period is about 16.67 milliseconds (1/60th of a second), while for 50Hz, it’s 20 milliseconds (1/50th of a second).
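The relationship between frequency, period, and the sine wave itself can be sketched in a few lines. This is only an illustration of an ideal sine wave; the function names and the example voltages (230 V RMS at 50Hz, 120 V RMS at 60Hz) are assumptions chosen for the demo.

```python
import math


def period_ms(frequency_hz: float) -> float:
    """Duration of one full AC cycle in milliseconds."""
    return 1000.0 / frequency_hz


def instantaneous_voltage(t_s: float, frequency_hz: float, v_rms: float) -> float:
    """Voltage of an ideal sine wave at time t_s (seconds), starting from zero."""
    return v_rms * math.sqrt(2.0) * math.sin(2.0 * math.pi * frequency_hz * t_s)


for hz, v_rms in ((50.0, 230.0), (60.0, 120.0)):  # assumed example voltages
    quarter = period_ms(hz) / 4000.0  # a quarter period in seconds, where the sine peaks
    print(f"{hz:.0f} Hz: period {period_ms(hz):.2f} ms, "
          f"peak {instantaneous_voltage(quarter, hz, v_rms):.0f} V")
```

The peak of the wave arrives a quarter of a period after the zero crossing, so it comes a little sooner at 60Hz than at 50Hz.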

The visual representation of AC power is a sine wave. This wave’s frequency dictates how quickly the voltage and current change direction. Devices are engineered to operate within a specific frequency range, and deviations can affect their performance or even cause damage. It’s like trying to run a clock designed for 60 seconds per minute at 50 seconds per minute – things just won’t line up correctly.

Different frequencies can also have subtle effects on everyday things. For instance, older lighting technologies might show a noticeable flicker if the frequency is too low, which is why early European systems moved from 40Hz to 50Hz. Another everyday effect is the audible “mains hum,” a byproduct of the magnetic fields created by the AC current vibrating components in appliances.

The pitch of this hum will be different depending on whether the power is 50Hz or 60Hz. You can even use this hum as a forensic tool to verify the authenticity of audio recordings, as the hum’s frequency, including its small drifts, will match the local power grid’s frequency at the time of recording. For more on how these frequencies are used globally, check out the utility frequency page.

18. Power Factor and Inductance

When we talk about AC power, especially the difference between 50Hz and 60Hz, the concepts of power factor and inductance pop up pretty quickly. It’s not just about how fast the electricity is oscillating; it’s also about how efficiently that power is being used.

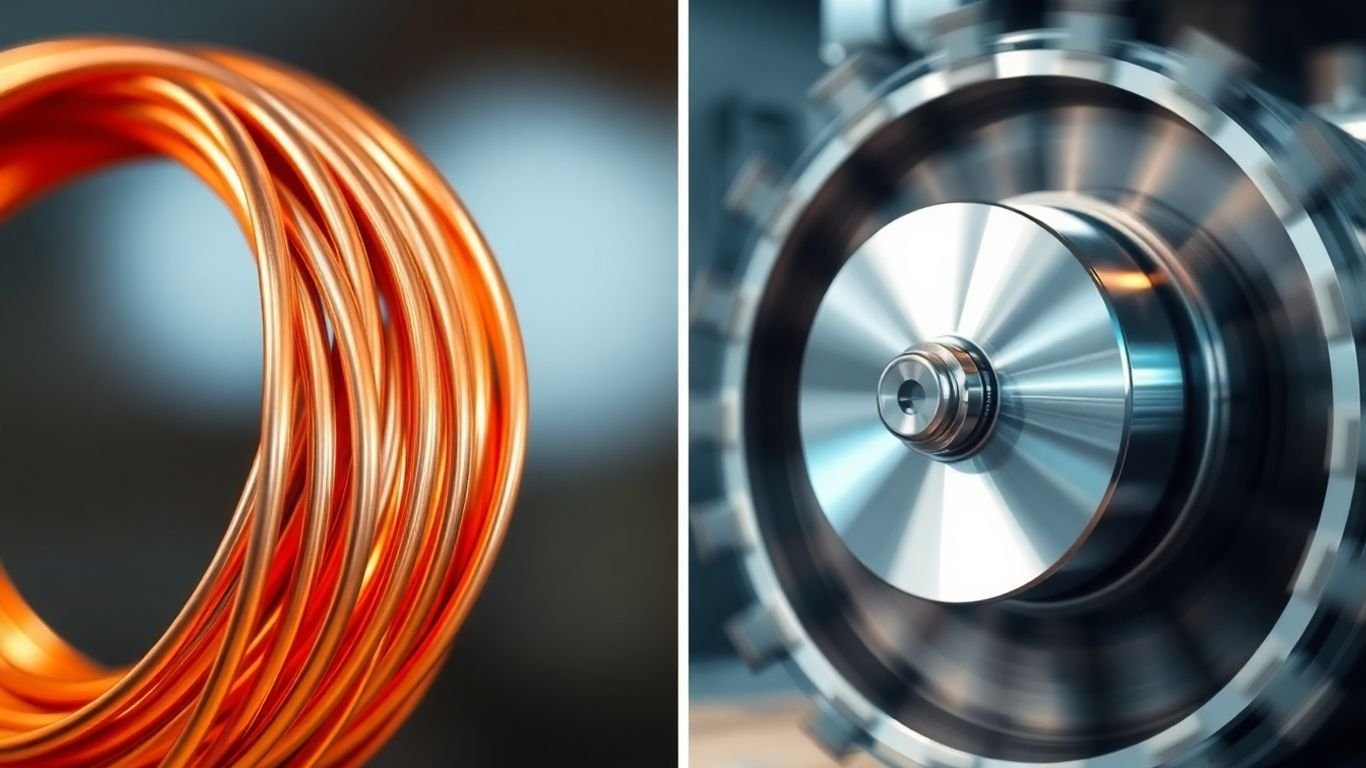

Think of inductance as a kind of electrical inertia. Inductive components, like the coils in motors or transformers, resist changes in current. This resistance creates a phase shift between the voltage and current. In simpler terms, the voltage peaks before the current does. This phase difference is what affects the power factor. A lower power factor means less real power is delivered for the same amount of apparent power.

Higher frequencies tend to have a more pronounced effect from inductance in power lines. This is because inductive reactance, which is the opposition to current flow due to inductance, increases in direct proportion to frequency (X_L = 2πfL). So, at 60Hz compared to 50Hz, the inductive reactance of the same line is 20% higher. This can lead to a larger voltage drop and a poorer power factor over long transmission lines, meaning more current, and so more heat loss, for the same delivered power.

Here’s a quick look at how frequency impacts inductive reactance:

| Frequency | Inductive Reactance (Relative) |
|---|---|
| 50 Hz | 1.0 |
| 60 Hz | 1.2 |

This difference might seem small, but over thousands of miles of power lines, it adds up. It’s one of the reasons why very high frequencies aren’t practical for long-distance power transmission, even though they might allow for smaller transformers. The trade-off is significant.
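Here is a small sketch of how the reactance formula feeds into power factor for a simple series resistor-inductor load. The resistance and inductance values are assumptions picked only to make the effect visible; real lines and loads are more complicated.

```python
import math


def inductive_reactance(frequency_hz: float, inductance_h: float) -> float:
    """X_L = 2 * pi * f * L, in ohms."""
    return 2.0 * math.pi * frequency_hz * inductance_h


def power_factor(resistance_ohm: float, reactance_ohm: float) -> float:
    """Power factor of a series R-L load: R divided by the impedance magnitude."""
    return resistance_ohm / math.hypot(resistance_ohm, reactance_ohm)


R_OHM = 10.0  # assumed load resistance
L_H = 0.02    # assumed load inductance (20 mH)

for hz in (50.0, 60.0):
    x_l = inductive_reactance(hz, L_H)
    print(f"{hz:.0f} Hz: X_L = {x_l:.2f} ohm, power factor = {power_factor(R_OHM, x_l):.3f}")
```

With the same hardware, the reactance at 60Hz comes out 1.2 times the 50Hz value, and the power factor drops a little as a result.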

The choice between 50Hz and 60Hz involved balancing these electrical properties with other factors like the physical size of equipment and historical preferences. It wasn’t a clear-cut win for one over the other, but rather a compromise that worked for the systems being developed at the time.

So, while both frequencies are generally effective, the slight increase in inductive effects at 60Hz is a factor engineers consider, especially when designing systems for long distances or when optimizing the efficiency of inductive loads. It’s a subtle but important detail in the grand scheme of electrical grids, influencing everything from transformer size to overall system losses. Understanding this helps explain why certain frequencies became dominant in different parts of the world, and why adapting equipment between them isn’t always straightforward. You can see how generator speed is tied to frequency on pages discussing the rotation speed of the generator.

Some key points to remember:

- Inductance causes a phase lag between voltage and current.

- Higher frequencies increase inductive reactance.

- A poorer power factor results from increased inductive effects.

- This impacts the efficiency of power transmission over long distances.

19. Aircraft Power Frequencies

When you think about powering up an airplane, you might not immediately consider the frequency of the electricity. But it’s actually a pretty big deal, especially when you’re trying to keep things light and compact. Aircraft often use a higher frequency, like 400 Hz, compared to the 50 Hz or 60 Hz we see on the ground.

Why the switch? Well, it all comes down to efficiency and size. Higher frequencies allow for smaller and lighter transformers and motors. Think about it: a transformer that can handle the same amount of power at 400 Hz can be significantly smaller than one designed for 60 Hz. This is a huge advantage when every ounce and inch counts in an aircraft. It means less weight to carry and more space for passengers or cargo.

However, this higher frequency isn’t really practical for long-distance power transmission. The inductance in the wires really starts to cause problems at these higher frequencies, making it tough to send power over long stretches. So, 400 Hz systems are usually kept within the confines of the aircraft itself, or other similar enclosed spaces like submarines or even some server rooms.

Here’s a quick look at how frequency affects component size:

| Frequency (Hz) | Transformer Size (Relative) | Motor Size (Relative) |
|---|---|---|
| 60 | 1.0 | 1.0 |
| 400 | ~0.5 | ~0.5 |

It’s a trade-off, really. You gain benefits in component size and weight, but you lose the ability to transmit power efficiently over vast distances. The US military even has a standard, MIL-STD-704, that lays out the rules for using 400 Hz power on aircraft, showing just how important this specific frequency is in aviation.

20. Japanese Dual Frequency System

Japan’s electrical grid is a bit unique because it actually uses two different frequencies: 50 Hz and 60 Hz. This situation didn’t happen overnight; it’s a direct result of how Japan initially acquired its power generation technology.

Back in the late 1800s, when electricity was first being rolled out, Japan bought generators from different countries. The eastern part of the country, including Tokyo, got its equipment from AEG in Germany, which was using a 50 Hz standard. Meanwhile, the western part, around Osaka, received generators from General Electric in the United States, which was leaning towards a 60 Hz standard.

This historical split is why Japan still has a divide between its 50 Hz and 60 Hz regions today.

This dual-frequency system means that power needs to be converted between the two standards in certain areas.

- Eastern Japan: Primarily uses 50 Hz.

- Western Japan: Primarily uses 60 Hz.

To manage this, Japan has several high-voltage direct current (HVDC) back-to-back converter stations. These stations are pretty important for making sure power can flow smoothly between the two different frequency zones. Some of the key ones include Shin Shinano, Sakuma Dam, Minami-Fukumitsu, and Higashi-Shimizu.

The existence of these two frequencies isn’t just a historical quirk; it has practical implications for appliance compatibility and grid management. While many modern appliances can handle both frequencies, older or specialized equipment might be sensitive to the frequency difference. It also means that power transmission across the boundary requires careful engineering to maintain stability and efficiency.

21. Economic Factors in Frequency Choice

When it comes to choosing a generator frequency, there’s a whole lot of economic stuff to think about. It’s not just about picking a number; it’s about what makes the most sense financially for the long haul. Back in the day, people had to make tough calls. If you wanted to use transformers and arc lights, a higher frequency, like 60Hz, was better because it meant smaller, cheaper transformers and less noticeable light flicker. But if your main gig was long-distance power lines or running motors, a lower frequency, maybe 25Hz or 50Hz, was often the way to go. It was all about balancing the costs and what worked best for the equipment you were using.

The decision on choosing generator frequency was really a compromise between competing needs.

Here’s a quick look at some of the trade-offs:

- Transformers: Higher frequencies mean smaller, less expensive transformers. Lower frequencies require bigger, costlier ones.

- Motors: Different motors perform better at different frequencies. Some industrial applications might have specific needs that influence the choice.

- Transmission Lines: Higher frequencies can lead to increased losses over long distances, which adds to the cost of electricity. This is why some older systems used lower frequencies for extensive transmission networks.

- Lighting: Early lighting technologies, especially arc lamps, were sensitive to frequency, with lower frequencies causing visible flicker. This pushed for higher frequencies to improve the visual experience.

It’s interesting how historical preferences played a role too. American engineers sometimes leaned towards 60Hz because it fit nicely with other time divisions, while European engineers preferred 50Hz as it aligned with their decimal systems. These aren’t huge technical reasons, but they did influence the path taken. Ultimately, creating a unified system that could handle both lighting and motor loads efficiently helped to streamline electricity production and made the overall economics more favorable.

Running centrifugal pumps at higher frequencies, like 60 Hz compared to 50 Hz, can lead to significantly higher electricity bills, because the pump spins faster and its power draw climbs steeply with speed. This matters most in areas with time-of-use pricing or high peak-demand charges. Operating outside of peak usage windows is crucial to manage costs effectively, as discussed in managing pump costs.
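The reason pump costs jump comes from the pump affinity laws: for a fixed impeller, flow scales roughly with speed, head with speed squared, and shaft power with speed cubed. The sketch below assumes the idealized case where pump speed tracks supply frequency exactly (ignoring motor slip and the piping system), and the function name `affinity_scaling` is just for illustration.

```python
def affinity_scaling(old_hz: float, new_hz: float) -> dict[str, float]:
    """Idealized affinity-law multipliers when pump speed tracks supply frequency."""
    ratio = new_hz / old_hz
    return {"flow": ratio, "head": ratio ** 2, "power": ratio ** 3}


scale = affinity_scaling(50.0, 60.0)
print({name: round(value, 3) for name, value in scale.items()})
```

Under these assumptions, a 20% speed increase gives 20% more flow but asks for roughly 73% more power, which is where the bigger bill comes from.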

The economic landscape of the early 20th century heavily shaped the adoption of standard power frequencies. Manufacturers and utility providers had to weigh the immediate costs of equipment against the long-term operational expenses and the capabilities of emerging technologies. This often led to regional differences in frequency standards based on the dominant industries and infrastructure development at the time.

22. Steam Engine and Turbine Design

When the electrical grid was first being set up, the choice of frequency had a lot to do with the machinery available to generate the power. Early on, steam engines were the big players. These engines turned massive shafts, and the speed they spun at directly influenced the frequency of the electricity produced by the generators attached to them. Think of it like this: the faster the engine spun, the higher the frequency.

It wasn’t just about speed, though. The design of the steam engines and, later, steam turbines, played a role. A generator’s frequency is determined by its rotational speed (RPM) and the number of magnetic poles it has. The formula is pretty straightforward: Frequency = (RPM × Poles) / 120. So, if you had a generator with 4 poles, it would need to spin at 1,800 RPM to produce 60 Hz power, or 1,500 RPM for 50 Hz power.

Here’s a quick look at how pole count affects RPM for different frequencies:

| Poles | RPM at 60 Hz | RPM at 50 Hz |
|---|---|---|
| 2 | 3,600 | 3,000 |
| 4 | 1,800 | 1,500 |
| 6 | 1,200 | 1,000 |
| 8 | 900 | 750 |
| 10 | 720 | 600 |
| 12 | 600 | 500 |
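The table values fall straight out of the formula, and a short sketch can regenerate them. The function names here are made up for illustration; the only thing taken from the text is the relationship Frequency = (RPM × Poles) / 120.

```python
def frequency_hz(rpm: float, poles: int) -> float:
    """Electrical frequency of a synchronous generator: f = RPM * poles / 120."""
    return rpm * poles / 120.0


def synchronous_rpm(target_hz: float, poles: int) -> float:
    """Shaft speed needed for target_hz: RPM = 120 * f / poles."""
    if poles < 2 or poles % 2:
        raise ValueError("poles must be a positive even number")
    return 120.0 * target_hz / poles


print("Poles | RPM at 60 Hz | RPM at 50 Hz")
for poles in range(2, 14, 2):
    print(f"{poles:5d} | {synchronous_rpm(60, poles):12.0f} | {synchronous_rpm(50, poles):12.0f}")
```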

Early engineers had to balance a few things. They wanted a frequency that worked well for lighting (avoiding flicker) and motors, but they also had to work with the steam engines and turbines they had or could build. The physical limitations and design choices of these prime movers heavily influenced the initial frequency standards adopted. For instance, the Niagara Falls project, a really big deal back in the day, ended up using 25 Hz partly because the turbines were already specified to run at 250 RPM. This low frequency was actually better for some early industrial motors and DC conversion equipment, but not ideal for lighting.

The development of more efficient steam turbines, which could operate at higher and more consistent speeds, made higher frequencies like 50 Hz and 60 Hz more practical. These turbines allowed for smaller, lighter generators for the same power output compared to older steam engine designs.

So, you see, it wasn’t just a random number picked out of a hat. The mechanical realities of turning all that electricity into motion, and then turning motion into electricity, really shaped the grid we have today. It’s fascinating how much the early mechanical engineering decisions still affect our power systems. You can read more about the history of the steam turbine and its role in power generation. It’s a story of innovation and compromise, all spinning around those early generators.

23. RPM and Generator Pole Counts

Ever wonder how a generator actually makes that electricity we use every day? It’s all about spinning parts and magnets. The speed a generator’s rotor spins, measured in revolutions per minute (RPM), directly affects the frequency of the alternating current (AC) it produces. This relationship is pretty straightforward: for a given frequency, a generator with more poles can spin slower, and one with fewer poles has to spin faster.

Think of it like this: each time a pair of magnetic poles passes a coil, it creates one full cycle of AC. So, if a generator has more pole pairs, it needs fewer rotations to complete the same number of cycles. This is a key factor when comparing generators built for 50Hz and 60Hz systems.

Here’s a look at how RPM and pole counts relate to frequency:

- Two-pole generators: Need to spin at 3600 RPM for 60Hz and 3000 RPM for 50Hz.

- Four-pole generators: Spin at 1800 RPM for 60Hz and 1500 RPM for 50Hz.

- Higher pole counts: Allow for even slower rotation speeds, which can be beneficial for certain types of prime movers, like slower diesel engines.

The formula that ties it all together is: RPM = (120 * Frequency) / Number of Poles. This equation shows that if you want to keep the frequency the same, increasing the number of poles will decrease the required RPM, and vice versa.
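Turning the formula around tells you how many poles a generator needs for a prime mover that runs at a fixed speed. This is a rough sketch; the function name `poles_for_speed` and the sample shaft speeds are illustrative assumptions.

```python
def poles_for_speed(target_hz: float, shaft_rpm: float) -> int:
    """Pole count that yields target_hz at shaft_rpm, from poles = 120 * f / RPM.

    Raises ValueError when the result is not a positive even whole number,
    since poles only come in north/south pairs.
    """
    poles = 120.0 * target_hz / shaft_rpm
    rounded = round(poles)
    if rounded < 2 or rounded % 2 or abs(poles - rounded) > 1e-9:
        raise ValueError(f"{shaft_rpm} RPM cannot produce {target_hz} Hz exactly")
    return rounded


# Illustrative shaft speeds (assumed, not tied to any specific product).
print(poles_for_speed(60.0, 1800.0))  # a 1,800 RPM engine on a 60 Hz system
print(poles_for_speed(50.0, 750.0))   # a slow 750 RPM machine on a 50 Hz system
```

The check for an even whole number matters: not every shaft speed can hit a given frequency, so the engine speed and the pole count have to be chosen together.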

This is why you see different designs. For instance, a diesel generator might use a different pole configuration than a large steam turbine generator. The choice impacts not just the speed but also the physical design and cost of the generator. It’s a balancing act to match the generator to its prime mover and the intended application.

The historical adoption of certain frequencies, like 60Hz in North America and 50Hz in Europe, wasn’t just random. It was influenced by the types of prime movers available at the time and the performance characteristics of early electrical equipment, especially motors. Slower engines were more common initially, favoring designs that could produce power at lower RPMs, which meant more poles for a given frequency.

So, while you might think frequency is just a number, it’s deeply tied to the mechanical engineering of the generators themselves. It’s a fascinating interplay between speed, design, and the electricity that powers our world.

24. Skin Effect in Conductors

So, let’s talk about the skin effect. It’s this weird phenomenon where alternating current (AC) doesn’t flow evenly through a conductor. Instead, it tends to concentrate near the surface, or the ‘skin’, of the wire. This effect gets more pronounced as the frequency of the AC signal goes up.

Think of it like this: at 50Hz or 60Hz, the current isn’t just zipping through the middle of the wire. It’s already starting to push outwards. For really high-current applications, especially with thicker wires, this means not all the metal is actually doing useful work. The center of the conductor becomes less efficient.

The higher the frequency, the shallower the depth to which the current penetrates. This is often referred to as the ‘skin depth’.

Here’s a quick look at how frequency impacts skin depth in copper:

| Frequency | Skin Depth (approx.) |
|---|---|
| 50 Hz | ~9.2 mm |
| 60 Hz | ~8.5 mm |
| 100 Hz | ~6.5 mm |
| 1 kHz | ~2.1 mm |
| 1 MHz | ~0.065 mm |
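Skin depth can be estimated with the standard formula, the square root of resistivity divided by (π × frequency × permeability). The sketch below assumes a copper resistivity of about 1.68e-8 ohm-metres near room temperature and a non-magnetic conductor; the function name `skin_depth_mm` is made up for illustration.

```python
import math

MU_0 = 4.0e-7 * math.pi       # permeability of free space, H/m
COPPER_RESISTIVITY = 1.68e-8  # ohm-metres near room temperature (assumed)


def skin_depth_mm(frequency_hz: float, resistivity: float = COPPER_RESISTIVITY,
                  relative_permeability: float = 1.0) -> float:
    """Skin depth in millimetres: sqrt(rho / (pi * f * mu))."""
    mu = MU_0 * relative_permeability
    return 1000.0 * math.sqrt(resistivity / (math.pi * frequency_hz * mu))


for hz in (50.0, 60.0, 400.0, 1_000.0, 1_000_000.0):
    print(f"{hz:>11,.0f} Hz: {skin_depth_mm(hz):.3f} mm")
```

Because the depth shrinks with the square root of frequency, going from 50Hz to 60Hz only changes it by a few percent, while going to radio frequencies shrinks it to a small fraction of a millimetre.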

This is why, for very high-frequency applications, you might see wires made of multiple, insulated strands (like Litz wire) or even hollow conductors. For standard 50Hz and 60Hz power transmission, it’s not usually a deal-breaker, but it’s a factor engineers consider, especially for large transmission lines. They might use steel-reinforced aluminum cables (ACSR), for instance, where the current flows mainly in the aluminum outer strands, which is good for conductivity, while the steel core provides strength. It’s a clever way to manage the physics of electricity, kind of like how off-grid systems use specialized components to manage energy flow.

It’s also why the physical arrangement of materials in conductors matters. In many overhead lines the aluminum sits on the outside and the steel in the middle for strength, so the better conductor is exactly where the current tends to flow.

25. Urban Legends and Frequency History

It’s funny how we just accept that our power runs at 50 or 60 Hertz without really thinking about it. But the history behind these numbers is actually pretty wild, full of competing ideas and even some myths. For a long time, there wasn’t a clear winner, and different places used all sorts of frequencies. You had systems running at 133 Hz, 66.7 Hz, and even some really low ones like 25 Hz, especially for industrial stuff like electric furnaces or early rail systems. It’s striking how much variation there was before things started to settle down.

Some people say that the choice of frequency was purely about efficiency, but it was more complicated than that. Early on, higher frequencies were better for lighting because they reduced flicker, but they caused more losses in transmission lines. Lower frequencies were better for motors and transmission but made lights flicker noticeably. It was a balancing act.

Here’s a look at some of the frequencies that were actually in use:

- 133 Hz: Used for early lighting systems in the UK and Europe.

- 60 Hz: Became common in the US, adopted by many manufacturers.

- 25 Hz: Popular for industrial applications and early electric railways.

- 40 Hz: Seen in places like Jamaica and Belgium.

There are stories, almost like urban legends, about why certain frequencies won out. One popular idea is that Nikola Tesla’s preference for 60 Hz, championed by Westinghouse, clashed with Thomas Edison’s direct current (DC) system. While Tesla was a huge player, the actual adoption of frequencies was a messy, gradual process involving many engineers and companies, not just a single battle of titans. The development of reliable alternators and the needs of different appliances played a massive role in shaping what we use today. It wasn’t just about AC versus DC, but also about which AC was best for the job.

It’s also interesting to note that even after 50 Hz and 60 Hz became dominant, some specialized frequencies stuck around. For instance, certain railway systems still use 16.7 Hz for their power. The whole situation highlights how technological standards evolve through a mix of technical merit, economic factors, and historical circumstances. It’s a reminder that the systems we rely on have a complex past, and sometimes, the simplest answers aren’t the whole story. You can find more about the early days of power transmission on pages discussing historical adoption of frequencies.

So next time you plug something in, remember the decades of experimentation and debate that led to that steady hum.

So, 50Hz or 60Hz: Does It Really Matter?

Ultimately, when you look at the whole picture of 50Hz versus 60Hz power, it’s less about one being inherently better and more about history and regional choices. Both frequencies have been powering our world for a long time, and most of the gadgets we use today can handle either with no problem, maybe needing a simple adapter if you travel. The real difference is in how the power grids and some heavy-duty equipment are designed. For most of us just plugging things in, the frequency is just a number that’s already set for where we live. It’s pretty interesting how these standards came about, though, isn’t it?

Frequently Asked Questions

What’s the main difference between 50Hz and 60Hz power?

The main difference is how many complete cycles the electricity goes through each second: 50 cycles at 50Hz and 60 cycles at 60Hz. (The current actually reverses direction twice in every cycle.) This affects how fast motors run and how electrical devices are designed.

Why do different countries use different frequencies?

It’s mostly due to history! When electricity was first being set up, different companies and countries made different choices. For example, America went with 60Hz, while Europe mostly adopted 50Hz. Once a standard was set, it was hard to change because all the equipment was built for it.

Does 60Hz power mean my devices run faster?

Yes, generally. Motors and other devices designed for 60Hz will run about 20% faster than similar devices designed for 50Hz. However, just plugging a 50Hz device into a 60Hz outlet might not work well unless it’s built to handle both.

Is one frequency better than the other?

Neither is inherently better. Both 50Hz and 60Hz work well. 60Hz allows slightly smaller transformers and faster motor speeds, while 50Hz means slightly lower inductive reactance on long lines. The most important thing is that the frequency is steady and reliable.

Can I use my electronics from one country in another?

Sometimes, but not always. Many modern electronics, like phone chargers, can handle both 50Hz and 60Hz. However, some devices, especially older ones or those with motors, might not work correctly or could even be damaged if plugged into a different frequency without a special converter.

Why did early engineers choose these frequencies?

Early choices were a compromise. Engineers considered things like how big transformers needed to be, how efficiently power traveled, and whether lights flickered. 60Hz was chosen in the US partly because it was a nice round number, while in Europe, 50Hz was adopted and became widespread.