AI Energy Consumption: How Much Power Does a Single Prompt Use?

So, you’ve probably heard a lot about AI lately. It’s everywhere, right? From writing emails to making cool pictures, it’s pretty amazing. But have you ever stopped to think about what it takes to make all that happen? Turns out, these smart tools use a surprising amount of power. We’re talking about the energy consumption behind every single question you ask, every image you generate. It’s not just about clever algorithms—it’s also about the electricity needed to keep massive data centers running. This makes AI energy consumption a big topic, and understanding it is becoming more important as these tools become a bigger part of our lives. Let’s break down what’s really going on.

Key Takeaways

- A single text-based AI prompt, like asking ChatGPT a question, uses a small amount of energy, estimated around 0.3 watt-hours. This is much less than earlier estimates suggested.

- While one prompt uses little power, the sheer volume of AI queries globally adds up, leading to significant overall energy demand and impacting power grids.

- The energy used by AI is also tied to water usage for cooling data centers, and the carbon footprint depends heavily on how the electricity is generated (fossil fuels vs. renewables).

- Factors like model complexity, the length of your input and output, and the efficiency of the hardware and data centers all play a role in how much energy AI uses.

- There’s a push for more transparency from AI companies about their energy use, and for developing more energy-efficient AI models and policies to manage this growing demand sustainably.

Understanding AI Energy Consumption Per Prompt

The Electricity Behind Every Query

So, you’re probably wondering, “How much electricity does ChatGPT consume per query?” It’s a question on a lot of people’s minds these days, especially with AI becoming so common. Think of it like this: every time you send a prompt, whether it’s to generate text, an image, or even a short video, the servers behind these AI models are doing a ton of calculations. This process definitely uses energy, and to keep things from overheating, data centers often rely on extensive cooling systems.

Estimates for the electricity cost of running AI models like GPT vary, but a common figure for a typical text-based query is around 0.3 watt-hours. However, this number can jump significantly, to roughly 2.5 to 40 watt-hours, if you’re sending really long inputs. It’s a bit like comparing a quick text message to sending a whole novel: the energy needed is just different.

Here’s a rough idea of how the energy cost of an AI prompt compares with that of a search engine query, though it’s tricky to get exact numbers:

- AI Query (Text-based): ~0.3 – 40 Wh (depending on input/output length)

- Google Search Query: A widely cited figure is about 0.0003 kWh (0.3 Wh) per search, roughly the same as the low-end estimate for a short AI text query, though this can also vary.
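Putting both figures in the same unit makes the comparison easier to read. The short Python sketch below converts the per-search figure from kWh to Wh and divides the AI estimates from the list above by it. The inputs are only the rough estimates quoted here, not measurements.

```python
# Compare the per-query estimates quoted above in a single unit (Wh).
# These are rough published-style estimates, not measurements.

WH_PER_KWH = 1000

def kwh_to_wh(kwh: float) -> float:
    """Convert kilowatt-hours to watt-hours."""
    return kwh * WH_PER_KWH

search_wh = kwh_to_wh(0.0003)      # widely cited per-search figure
ai_low_wh, ai_high_wh = 0.3, 40.0  # text-prompt range from the list above

for label, wh in [("short AI query", ai_low_wh), ("very long AI query", ai_high_wh)]:
    ratio = wh / search_wh
    print(f"{label}: {wh} Wh, about {ratio:.0f}x one search ({search_wh:.1f} Wh)")
```

At the short end, an AI text query and a search come out roughly even; the gap only opens up with very long inputs, where the estimate is over a hundred times higher.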

It’s important to remember that these are just estimates. Companies like OpenAI don’t always share the exact details about their model parameters or data center operations, making it hard to pin down precise figures. More transparency from AI companies would really help clarify these numbers.

Why Estimates Vary Across AI Models

The actual energy consumption for AI tasks is a complex calculation influenced by many factors, including the specific model used, the hardware it runs on, and the length and complexity of the request. Because companies rarely disclose these details, researchers have to make different assumptions, which is why published estimates for similar prompts can differ so widely. While a single prompt might seem small, the cumulative effect across billions of daily interactions is substantial, contributing to the growing energy demands of the tech sector. Understanding these figures is key to discussing the environmental impact of AI and finding ways to make these powerful tools more sustainable. For those looking into cleaner energy solutions for infrastructure, exploring options like hydrogen-powered generators could be part of the broader conversation about powering the future responsibly.

There are also ways to reduce the energy usage of a single AI prompt. For instance, keeping your prompts concise and to the point can help. Every extra word, even polite additions like ‘please’ and ‘thank you,’ adds a small amount of processing time and thus energy. It might seem minor, but when you multiply that by millions of users, it adds up.

Ultimately, the energy used by AI is a growing concern, and efforts are underway to make these models more efficient. It’s a balancing act between the incredible capabilities AI offers and the environmental cost associated with powering it.

Factors Influencing AI’s Power Demands

So, what actually makes an AI gobble up more electricity? It’s not just one thing, but a mix of factors that all play a part. Think of it like trying to cook a meal – the ingredients, the recipe, and the oven all affect how long it takes and how much energy you use.

Model Complexity and Parameter Count

One of the biggest drivers is how complex the AI model itself is. AI models are built with something called parameters, which are basically like the knobs and dials that the AI uses to learn and make decisions. The more parameters a model has, the more intricate its understanding can be, but it also means it needs more computational power to run. Larger models with billions or even trillions of parameters require significantly more energy for every single task they perform. It’s like trying to solve a really complicated math problem versus a simple one; the harder problem needs more brainpower, and in AI’s case, that translates to more electricity.
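One way to see why parameter count matters is a common rule of thumb for transformer models: processing or generating one token costs roughly 2 floating-point operations (FLOPs) per parameter (for mixture-of-experts models, per active parameter). The Python sketch below turns that rule into energy. The hardware efficiency (FLOPs per joule) and utilization figures are hypothetical placeholders, not specs for any real chip or deployment.

```python
# Back-of-envelope inference energy, using the approximation that a
# transformer forward pass costs about 2 FLOPs per parameter per token.
# ASSUMPTION: all numeric inputs below are hypothetical.

def inference_energy_wh(params: float, tokens: int,
                        peak_flops_per_joule: float, utilization: float) -> float:
    """Estimate energy (Wh) to process `tokens` tokens with a `params`-parameter model."""
    flops = 2 * params * tokens                      # ~2 FLOPs per parameter per token
    effective = peak_flops_per_joule * utilization   # useful FLOPs actually achieved per joule
    joules = flops / effective
    return joules / 3600                             # 1 Wh = 3600 J

# Hypothetical hardware: peak of 1e12 FLOPs per joule, running at 10% utilization.
for params in (10e9, 100e9, 1e12):
    wh = inference_energy_wh(params, tokens=500, peak_flops_per_joule=1e12, utilization=0.10)
    print(f"{params:.0e} parameters, 500 tokens: ~{wh:.3f} Wh")
```

Because the estimate is linear in both parameters and tokens, a model ten times larger (or a response ten times longer) costs roughly ten times as much under these assumptions. Real systems add overheads, such as attention over long contexts and idle capacity, that this sketch ignores.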

Input and Output Length Impact

Believe it or not, even how you talk to the AI matters. The length of your request (the input) and the AI’s response (the output) directly affect energy use. A longer prompt means the AI has to process more text, and a longer answer means it has to generate more text. Every extra word, every extra sentence, adds a little to the processing time and, consequently, the energy consumed. Some researchers have even pointed out that polite phrases like ‘thank you’ or ‘please’ add to this; their individual impact is tiny, but it adds up when millions of people are using AI.
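To get a feel for how “tiny per prompt, big in aggregate” works, here is a rough Python calculation. It assumes energy scales linearly with token count and spreads the 0.3 Wh per-prompt figure over a hypothetical 500 tokens; the prompt volume is hypothetical too. Treating input and output tokens as equally costly is a simplification, since input tokens are often cheaper to process.

```python
# How a few extra tokens per prompt scale with volume.
# ASSUMPTIONS (hypothetical): energy grows linearly with tokens, every token
# costs the same, and the 0.3 Wh estimate corresponds to about 500 tokens.

PROMPT_WH = 0.3
PROMPT_TOKENS = 500
WH_PER_TOKEN = PROMPT_WH / PROMPT_TOKENS

def extra_energy_kwh(extra_tokens_per_prompt: int, prompts_per_day: int) -> float:
    """Daily energy (kWh) attributable to extra tokens under the linear assumption."""
    return extra_tokens_per_prompt * WH_PER_TOKEN * prompts_per_day / 1000

# Hypothetical: roughly two extra tokens ("please", "thanks") on 10 million prompts a day.
print(f"{extra_energy_kwh(2, 10_000_000):.1f} kWh per day")
```

Under these assumptions that works out to about 12 kWh a day: small next to a data center’s total load, but it shows how per-prompt habits scale with usage.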

Hardware Efficiency and Data Center Operations

Where the AI runs also makes a big difference. AI models live in data centers, which are essentially huge buildings filled with powerful computers. The efficiency of the hardware in these data centers – the servers, the processors – plays a huge role. Newer, more efficient hardware can perform tasks using less electricity. Beyond the computers themselves, data centers need a lot of energy for cooling systems to keep everything from overheating. The location of the data center also matters; a hot climate means the cooling systems have to work much harder, using more power.

The energy needed for AI isn’t just about the AI model itself; it’s also about the infrastructure that supports it. This includes the power needed to keep servers running 24/7, the energy for cooling systems, and the electricity used for networking and data transfer. All these components contribute to the overall energy footprint.
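The data center industry commonly summarizes this overhead with a metric called power usage effectiveness (PUE): total facility energy divided by the energy used by the IT equipment itself. A PUE of 1.5 means every watt-hour of computing costs an extra half watt-hour in cooling, power conversion, and other infrastructure. The sketch below applies a few hypothetical PUE values to a hypothetical 0.3 Wh of server-side energy.

```python
# Power usage effectiveness (PUE) = total facility energy / IT equipment energy.
# A PUE of 1.0 would mean zero overhead for cooling, power conversion, lighting, etc.

def facility_energy_wh(it_energy_wh: float, pue: float) -> float:
    """Scale server-side (IT) energy up to whole-facility energy."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0 by definition")
    return it_energy_wh * pue

IT_WH = 0.3                      # hypothetical server-side energy for one prompt
for pue in (1.1, 1.5, 2.0):      # hypothetical facilities, efficient to inefficient
    total = facility_energy_wh(IT_WH, pue)
    print(f"PUE {pue}: {total:.2f} Wh total, {total - IT_WH:.2f} Wh of overhead")
```

A facility running at a PUE of 2.0 doubles the energy attributable to each prompt, which is why cooling efficiency gets so much attention.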

Here’s a simplified look at how these factors can influence energy use:

- Model Size: Larger models (more parameters) generally use more energy.

- Task Complexity: More complex tasks require more processing, thus more energy.

- Data Center Location: Hotter climates require more energy for cooling.

- Hardware Age: Older hardware is typically less energy-efficient than newer models.

- Prompt Length: Longer inputs and outputs increase processing time and energy use.

The Broader Impact of AI Energy Consumption

It’s easy to get caught up in the numbers for a single prompt, but the real story of AI’s energy use is much bigger. Think about it: AI isn’t just a tool you pull out occasionally anymore. It’s being woven into the fabric of our daily digital lives, from how we search for information to how we manage our schedules. This widespread integration means the energy demands are stacking up, and it’s starting to reshape our power grids.

AI’s Growing Demand on Power Grids

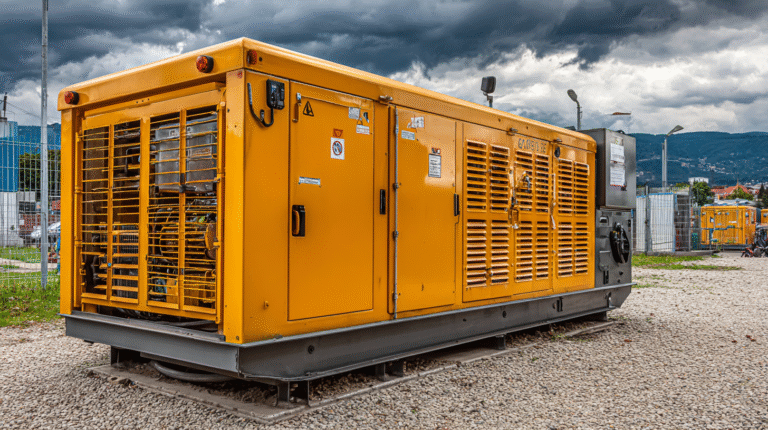

Big tech companies are making massive investments in AI infrastructure, including building new data centers. Some are even looking into new nuclear power plants to meet the anticipated energy needs. This isn’t just about keeping up with current demand; it’s about preparing for a future where AI is even more pervasive. For instance, data centers, which house the powerful computers that run AI, have already doubled their electricity consumption in recent years, largely due to AI hardware. Data centers already account for a notable share of US electricity consumption (about 4.4% in 2023, according to a Lawrence Berkeley National Laboratory report), and projections show AI’s share of this will only grow, potentially consuming as much electricity as millions of households in the coming years.
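Comparisons to “millions of households” are just unit conversions. The Python sketch below divides an annual electricity figure by an assumed average household consumption of 10,500 kWh per year, a round number in the range of recent U.S. averages. Both the household figure and the demand scenarios are assumptions for illustration.

```python
# Convert an annual electricity figure into "equivalent households".
# ASSUMPTION: an average household uses about 10,500 kWh per year
# (a round number near recent U.S. averages); real values vary by year and region.

KWH_PER_TWH = 1_000_000_000          # 1 TWh = 1e9 kWh
ASSUMED_HOUSEHOLD_KWH_PER_YEAR = 10_500

def household_equivalents(annual_twh: float) -> float:
    """Number of average households whose yearly use matches `annual_twh`."""
    return annual_twh * KWH_PER_TWH / ASSUMED_HOUSEHOLD_KWH_PER_YEAR

# Hypothetical annual AI electricity demand scenarios, in TWh.
for twh in (10, 50, 150):
    millions = household_equivalents(twh) / 1_000_000
    print(f"{twh} TWh per year is roughly {millions:.1f} million households")
```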

Data Center Cooling and Water Usage

Running these powerful AI systems generates a lot of heat. To keep the servers from overheating, data centers rely heavily on cooling systems. These systems, in turn, consume a substantial amount of energy. Beyond electricity, cooling often requires significant amounts of water, raising concerns about water scarcity in certain regions. Optimizing these cooling processes is becoming a key focus for improving the overall efficiency of AI operations. Innovations in waste-to-energy systems, for example, are being explored to manage the byproducts of powering these centers, aiming to make the entire process more sustainable.
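Water use can be estimated much like energy overhead, using water usage effectiveness (WUE): liters of water consumed on site per kilowatt-hour of IT energy. The Python sketch below applies two hypothetical WUE values, one for a facility with little evaporative cooling and one that relies heavily on it, to the 0.3 Wh per-prompt estimate and to a hypothetical billion prompts. On-site WUE leaves out water consumed at the power plants that generate the electricity.

```python
# Estimate on-site cooling water from energy use via water usage effectiveness (WUE),
# measured in liters of water per kWh of IT energy.
# ASSUMPTION: the WUE values and prompt volume below are hypothetical.

def water_liters(it_energy_kwh: float, wue_l_per_kwh: float) -> float:
    """On-site water (liters) for a given IT energy use and WUE."""
    return it_energy_kwh * wue_l_per_kwh

PROMPT_KWH = 0.3 / 1000           # 0.3 Wh per prompt, as estimated earlier
PROMPTS = 1_000_000_000           # hypothetical number of prompts

for wue in (0.2, 1.8):            # hypothetical: little vs. heavy evaporative cooling
    per_prompt_ml = water_liters(PROMPT_KWH, wue) * 1000
    total_l = water_liters(PROMPT_KWH * PROMPTS, wue)
    print(f"WUE {wue} L/kWh: {per_prompt_ml:.2f} mL per prompt, "
          f"{total_l:,.0f} L per billion prompts")
```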

The Role of Fossil Fuels in AI’s Carbon Footprint

When we talk about AI’s energy consumption, we also have to consider where that energy comes from. Many electrical grids still rely heavily on fossil fuels. This means that the electricity used by data centers, and therefore by AI, contributes to greenhouse gas emissions. The carbon intensity of the electricity powering AI operations can be significantly higher than that of the average grid. As AI use expands, the reliance on these carbon-intensive energy sources becomes a more pressing issue, directly impacting the climate. Finding ways to power AI with cleaner energy sources is a major challenge for the industry.

The rapid expansion of AI capabilities and its integration into everyday applications means that the energy required to power this revolution is substantial. While individual AI queries might seem minor, their cumulative effect, coupled with the energy-intensive nature of AI model training and operation, presents a significant challenge to our existing energy infrastructure and environmental goals. The choices made today about energy sources and efficiency will have long-lasting consequences.

Quantifying AI’s Environmental Toll

So, we’ve talked about how much power a single AI prompt might use, but what does that really mean for the planet? It’s not just about the electricity; it’s about where that electricity comes from and the ripple effects it has.

Carbon Intensity of Electricity Generation

Think about it: data centers need a lot of power. If that power comes from burning coal or natural gas, then every AI query, every image generated, contributes to greenhouse gas emissions. The source of the electricity is a massive factor in AI’s carbon footprint. Even a small amount of energy per prompt adds up quickly given the sheer volume of AI requests worldwide. For instance, a single text prompt might produce around 0.03 grams of CO2 equivalent, but multiplied across billions of prompts a day, the numbers become significant. It’s like a leaky faucet: one drop seems tiny, but over time it wastes a lot of water.
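The underlying arithmetic is simple: emissions equal energy used times the carbon intensity of the grid, and a per-prompt footprint scales linearly with volume. This Python sketch uses the 0.03 g figure above and a hypothetical volume of one billion prompts per day. Pairing that figure with 0.3 Wh implies a grid of about 100 g CO2e per kWh; that pairing is an assumption made only to show the formula.

```python
# Emissions = energy (kWh) x grid carbon intensity (g CO2e per kWh),
# and a per-prompt footprint scales linearly with the number of prompts.

def emissions_g(energy_wh: float, intensity_g_per_kwh: float) -> float:
    """Grams of CO2-equivalent for an energy use in Wh on a grid of given intensity."""
    return (energy_wh / 1000) * intensity_g_per_kwh

def daily_tonnes(grams_per_prompt: float, prompts_per_day: float) -> float:
    """Scale a per-prompt footprint to tonnes per day (1 tonne = 1,000,000 g)."""
    return grams_per_prompt * prompts_per_day / 1_000_000

# 0.3 Wh on a hypothetical 100 g/kWh grid works out to the 0.03 g quoted above.
print(f"{emissions_g(0.3, 100):.2f} g CO2e per prompt")

tonnes = daily_tonnes(0.03, 1_000_000_000)   # hypothetical: one billion prompts a day
print(f"{tonnes:.0f} t CO2e per day, about {tonnes * 365:,.0f} t per year")
```

Under these assumptions, a footprint of a few hundredths of a gram per prompt becomes roughly 30 tonnes a day.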

Regional and Temporal Variations in Emissions

It’s not the same story everywhere, though. The carbon intensity of electricity varies wildly depending on where you are in the world. Some regions rely heavily on renewable energy sources like solar and wind, which have a much lower carbon impact. Others are still very dependent on fossil fuels. This means that an AI query run in a region powered by clean energy will have a far smaller environmental toll than one run in a region that burns a lot of coal. Plus, the grid’s carbon intensity can change throughout the day, depending on energy demand and the mix of power sources available. This makes pinpointing an exact, universal carbon cost for AI tricky.
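To see how much location and timing matter, the Python sketch below computes the footprint of the same 0.3 Wh prompt under several grid carbon intensities. The intensity values are hypothetical, chosen only to span a wind- or hydro-heavy grid through a coal-heavy one, plus a midday solar peak versus an evening peak on a mixed grid.

```python
# Same prompt, different grids: emissions depend on where and when it runs.
# ASSUMPTION: the carbon intensities below are hypothetical, for illustration only.

def emissions_g(energy_wh: float, intensity_g_per_kwh: float) -> float:
    """Grams of CO2e for `energy_wh` on a grid with the given carbon intensity."""
    return (energy_wh / 1000) * intensity_g_per_kwh

SCENARIOS = {                      # g CO2e per kWh
    "wind/hydro-heavy grid": 30,
    "mixed grid, midday solar peak": 200,
    "mixed grid, evening peak": 450,
    "coal-heavy grid": 900,
}
PROMPT_WH = 0.3

for name, intensity in SCENARIOS.items():
    print(f"{name:32s} {emissions_g(PROMPT_WH, intensity):.3f} g CO2e")

cleanest = min(SCENARIOS, key=SCENARIOS.get)
dirtiest = max(SCENARIOS, key=SCENARIOS.get)
ratio = SCENARIOS[dirtiest] / SCENARIOS[cleanest]
print(f"Cleanest: {cleanest}; dirtiest is {ratio:.0f}x higher")
```

The energy per prompt never changes in this sketch; only the grid does, and that alone produces a thirtyfold spread.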

The Cumulative Effect of Widespread AI Use

When you start adding up all those individual prompts, image generations, and AI-assisted tasks, the total energy demand and associated emissions become substantial. It’s easy to dismiss the impact of a single query, but as AI becomes more integrated into everything from search engines to everyday apps, the collective demand grows. This increasing demand puts pressure on power grids and can influence energy policy. Some companies are even looking at building massive new data centers, which could require power on the scale of entire states. It’s a bit like how individual choices to drive gas-guzzling cars add up to a global climate problem; widespread AI use has a similar cumulative effect on energy resources and emissions. We need to be mindful of this as AI technology continues to expand, and look for ways to make its growth more sustainable, perhaps by choosing more efficient models for tasks, like using a smaller model for simpler questions [c44f].

The challenge lies in balancing the rapid advancement and adoption of AI with the planet’s capacity to sustain its energy needs without exacerbating climate change. Transparency from AI developers about their energy usage and carbon output is a key step towards addressing this issue effectively.

Improving AI Energy Efficiency

It’s becoming pretty clear that AI uses a good chunk of power, and as it gets more popular, we’ve got to figure out how to make it use less. It’s not just about the prompts themselves, but the whole system behind them. We need more transparency from the companies making these AI tools to really get a handle on the energy picture.

The Need for Greater Transparency from AI Companies

Right now, it’s tough to get solid numbers on how much energy AI actually uses. Companies are often tight-lipped about the specifics of their models, like how many parameters they have or which data centers they use. This makes it hard for researchers and the public to accurately measure and compare the energy footprint of different AI applications. Without this information, it’s like trying to fix a leaky faucet without knowing where the leak is coming from. We need AI developers to share more data so we can make informed choices about which tools to use and how to use them more efficiently. Just as appliances carry energy ratings, AI models could carry comparable efficiency labels.

Developing Energy-Efficient AI Models

Not all AI models are created equal when it comes to power use. Some tasks don’t need the biggest, most complex models. For instance, using a smaller, more specialized model for simple questions can drastically cut down on energy consumption compared to using a massive, general-purpose one.

Researchers are working on creating models that are just as accurate but use significantly less power. Think about it like using the right tool for the job – you wouldn’t use a sledgehammer to crack a nut. Choosing the right model for each task is a simple yet effective way to reduce the overall energy demand. Some studies show that models that perform similar tasks can have wildly different energy needs, sometimes by a factor of 40 or more!
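The “right tool for the job” idea is sometimes put into practice as a router that sends easy requests to a small model and harder ones to a large model. The toy Python sketch below shows the energy accounting. The keyword heuristic, the model names, and the per-query energy figures (set 40 times apart to echo the ratio above) are all hypothetical; production routers can be far more sophisticated, for example using a learned classifier.

```python
# Toy model router: simple prompts go to a small model, harder ones to a large one.
# ASSUMPTION: model names, energy figures, and the heuristic are all hypothetical.

MODEL_ENERGY_WH = {"small": 0.05, "large": 2.0}   # 40x apart, illustrative only
HARD_HINTS = ("explain", "analyze", "prove", "write code")
MAX_SIMPLE_WORDS = 50

def route(prompt: str) -> str:
    """Pick a model tier using prompt length and a few keyword hints."""
    text = prompt.lower()
    if len(text.split()) > MAX_SIMPLE_WORDS or any(h in text for h in HARD_HINTS):
        return "large"
    return "small"

prompts = [
    "What is the capital of France?",
    "Convert 5 km to miles",
    "Explain how transformers use attention",
]
routed_wh = sum(MODEL_ENERGY_WH[route(p)] for p in prompts)
large_only_wh = MODEL_ENERGY_WH["large"] * len(prompts)
print(f"Routed: {routed_wh:.2f} Wh; large model only: {large_only_wh:.2f} Wh")
```

In this example two of the three prompts are simple enough for the small model, so routing cuts the total from 6.00 Wh to 2.10 Wh. The savings depend entirely on how many requests really are simple and on the router not misjudging hard ones.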

Policy Frameworks for Sustainable AI

Beyond individual choices and model development, we also need broader policies to guide AI’s energy use. Imagine if there were standards, like an energy efficiency score for AI, similar to what we see on refrigerators or washing machines. This could push companies to prioritize efficiency in their designs and operations. It might mean that AI services used by millions of people would need to meet a certain energy performance level. This kind of regulation could help prevent the energy grid from being overwhelmed as AI use continues to grow. It’s also important to consider where the electricity comes from; using AI powered by renewable energy sources makes a big difference in its environmental impact.

From Data Centers to Hardware: Regulating AI’s Energy Use

Powering data centers with solar energy, for instance, would significantly reduce the carbon footprint associated with AI computations. We need to think about the entire lifecycle, from manufacturing the hardware to powering the data centers, and how to make it all more sustainable. It’s also worth noting that the efficiency of the underlying hardware, like GPUs, plays a big role: newer, more specialized chips often do more work per watt, yet each chip can draw more power overall. Making sure these chips are used wisely is key. We also need to consider the impact of cooling systems in data centers, which can use a lot of energy and water.

Some research suggests that the actual energy used by AI systems might be double what’s reported, once cooling and other infrastructure are factored in. This highlights the need for a holistic approach to AI energy efficiency, looking at everything from the code to the building it runs in. It’s a complex problem, but one we have to tackle if we want AI to grow responsibly. For instance, optimizing generator usage in data centers, much like ensuring diesel generators operate at peak efficiency, is a practical step that can be taken to manage loads properly.

Making AI more energy-efficient isn’t just a technical challenge; it’s a societal one. It requires collaboration between researchers, developers, policymakers, and users to create a future where AI innovation doesn’t come at an unacceptable environmental cost.

The Future of AI and Energy Demand

It feels like AI is everywhere now, doesn’t it? From helping with homework to writing code, it’s becoming a big part of our daily lives. But all this convenience comes with a cost, and that cost is energy. We’re talking about a massive shift in how we use power, and it’s happening fast.

Projected AI Energy Usage by 2030

Predicting the future is tricky, especially with something as new and fast-moving as AI. However, some reports suggest that by 2030, AI could be using a significant chunk of our electricity. Think about it: more personalized AI agents, AI helping with complex problem-solving, and AI integrated into almost every app we use. This means more data centers, more powerful hardware, and a lot more energy consumption. Some estimates show that AI could account for over half the electricity used by data centers in the US by 2028. That’s a huge jump from where we are now.

The Challenge of Scaling AI Infrastructure

Building out the infrastructure to support this AI boom is a massive undertaking. Tech giants are already investing billions in new data centers and AI hardware. For example, Google plans to spend $75 billion on AI infrastructure in 2025 alone. OpenAI and others are looking at building huge data centers that could demand power comparable to entire states. This rapid expansion puts a strain on existing power grids and requires careful planning to ensure we have enough reliable energy. It’s not just about building more servers; it’s about where that power comes from and how it’s delivered. We’re seeing moves to build new nuclear power plants, which highlights the scale of the energy needs. The development of advanced energy storage solutions, like those found in the Next-Gen Energy Matrix, will be key to managing these new demands [a63d].

Balancing Innovation with Environmental Responsibility

So, what’s the plan? It’s a tough balancing act. We want the benefits of AI, but we also need to be mindful of its environmental impact. Right now, there’s a real lack of transparency from AI companies about their energy use and the sources of that energy. This makes it hard for researchers and policymakers to plan effectively.

Here are some key areas we need to focus on:

- Transparency: AI companies need to share more data about their energy consumption and carbon footprint.

- Efficiency: Developing AI models that require less power to train and run is vital.

- Renewable Energy: Powering AI infrastructure with clean, renewable energy sources is a must.

- Policy: Governments need to create frameworks that encourage sustainable AI development and deployment.

The energy demands of AI are growing rapidly, and the way we power this growth will shape our energy future for years to come. It’s not just about individual queries anymore; it’s about the massive infrastructure being built to support AI’s expanding capabilities. We need to make smart choices now to ensure that AI’s progress doesn’t come at an unacceptable environmental cost.

So, What’s the Bottom Line?

It’s clear that while a single AI prompt won’t strain the power grid on its own, the collective demand is significant and growing fast. We’ve seen that the energy used for one query is pretty small: at around 0.3 watt-hours, it’s roughly what a 10-watt LED bulb uses in under two minutes. But when millions of us are asking AI questions all day, every day, it adds up.

Plus, the source of that electricity matters a lot for the environment. As AI becomes more common in everything we do online, understanding its energy footprint is important. Companies need to be more open about their energy use, and we all might need to think about how and when we use these powerful tools. It’s a complex picture, but one we definitely need to keep an eye on as AI continues to change our world.

Frequently Asked Questions

How much energy does a single AI prompt actually use?

It’s not a huge amount for just one prompt, maybe like the energy a small light bulb uses for a short time. Think about 0.3 watt-hours for a simple text question. But, if you ask it to do something more complex or give it a lot of information, it can use more power.

Is using AI like ChatGPT bad for the environment?

While one prompt doesn’t use much energy, the big picture is that lots of people using AI a lot can add up. This is because the electricity used to power the computers that run AI often comes from burning fuels that harm the planet. So, while your single use might be small, the total effect can be significant.

How does AI use water?

The computers that run AI get really hot. To keep them cool, data centers often use water. This water absorbs the heat and then evaporates in cooling towers. So, even though the AI isn’t directly drinking water, the process of keeping its computers from overheating uses a lot of it.

Why is it hard to know exactly how much energy AI uses?

Companies that make AI don’t always share all the details about how their systems work. We don’t know exactly how powerful their computers are, how much energy they use, or how they manage all the requests. This makes it tricky to get exact numbers, and many estimates are just educated guesses.

Can I do anything to make my AI use more energy-efficient?

Yes, you can! Try to keep your prompts clear and to the point. Avoid unnecessary words like ‘please’ and ‘thank you’ if you’re really concerned about energy use, as every extra word takes a bit more processing. Also, using AI during times when the power grid isn’t as busy, like avoiding peak hours, can help a little.

Will AI use a lot more energy in the future?

Experts think AI will likely use much more energy as it becomes more popular and is used in more ways. Some predictions suggest it could use a significant amount of electricity in the coming years. This is why people are looking for ways to make AI more energy-efficient and use cleaner electricity sources.